Integrate with other orchestration tools

Alongside dbt's built-in job scheduler, you can schedule and run your dbt jobs with other orchestration tools, such as the ones described on this page.

Install and configure these tools to automate your data workflows and trigger dbt jobs, including jobs hosted on the dbt platform, so that dbt runs as one step in your wider data pipeline.

Airflow

If your organization uses Airflow, there are a number of ways you can run your dbt jobs, including:

- dbt platform

- dbt Core

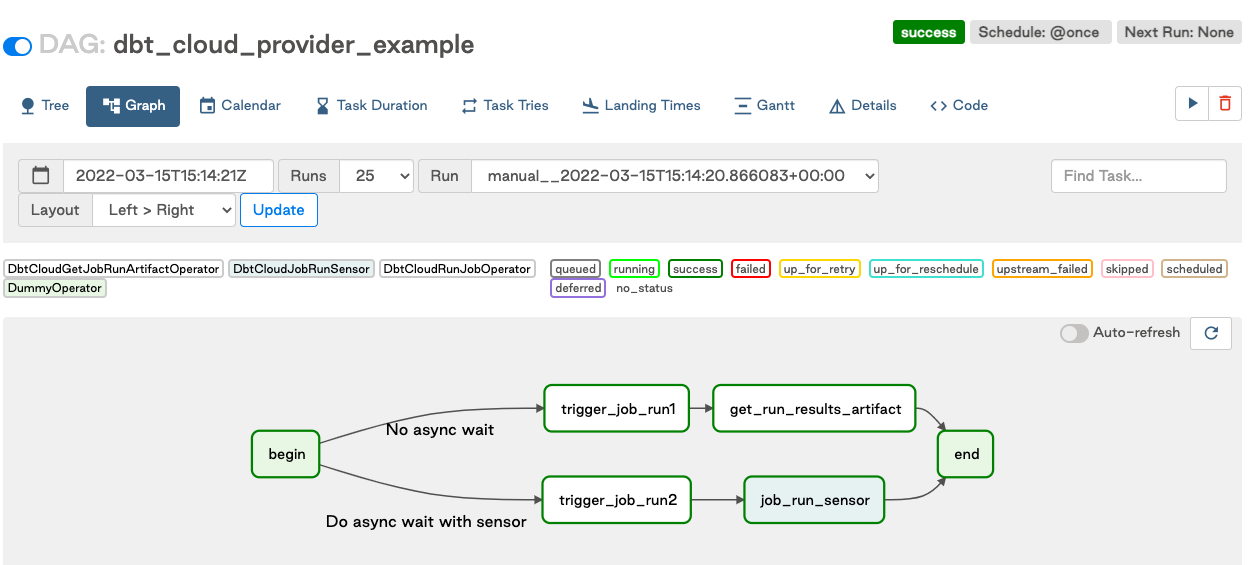

Installing the dbt Provider to orchestrate dbt jobs. This package contains multiple Hooks, Operators, and Sensors to complete various actions within dbt.

Invoking dbt Core jobs through the BashOperator. In this case, be sure to install dbt into a virtual environment to avoid issues with conflicting dependencies between Airflow and dbt.

For more details on both of these methods, including example implementations, check out this guide.
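
For the dbt Core route, the BashOperator only needs a shell command. One way to keep dbt's dependencies isolated from Airflow's is to call the dbt executable inside its own virtual environment directly, without activating it. The sketch below builds such a command; the function name and all paths are hypothetical placeholders, while --project-dir and --profiles-dir are standard dbt CLI flags.

```python
import shlex
from pathlib import PurePosixPath


def dbt_bash_command(venv_dir, project_dir, profiles_dir, dbt_args):
    """Build a shell command that runs dbt from its own virtual environment.

    Calling <venv>/bin/dbt directly avoids activating the environment and
    keeps dbt's Python dependencies separate from Airflow's.
    """
    # Hypothetical layout: the venv's executables live under <venv_dir>/bin.
    dbt_executable = str(PurePosixPath(venv_dir) / "bin" / "dbt")
    argv = [
        dbt_executable,
        *dbt_args,
        "--project-dir", project_dir,
        "--profiles-dir", profiles_dir,
    ]
    # shlex.join quotes any argument that contains spaces or shell metacharacters.
    return shlex.join(argv)
```

Inside a DAG, you could then pass the returned string as the bash_command argument of a BashOperator, for example BashOperator(task_id="dbt_build", bash_command=dbt_bash_command(...)).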

Automation servers

Automation servers (such as CodeDeploy, GitLab CI/CD (video), Bamboo, and Jenkins) can be used to schedule bash commands for dbt. They also provide a UI for viewing command-line logs, and they integrate with your git repository.

Azure Data Factory

Integrate dbt and Azure Data Factory (ADF) to build a single data process that runs from data ingestion through data transformation. By calling the dbt API from ADF, you can trigger dbt jobs as soon as your ingestion jobs complete.
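
Under the hood, the ADF web call that triggers a job is an ordinary HTTPS POST. The sketch below builds, but does not send, an equivalent request in Python so you can see its parts. It assumes the dbt Administrative API v2 trigger-run path, the "Token" authorization scheme, and a JSON body with a "cause" field; confirm these against the dbt API reference for your account. The function name is hypothetical.

```python
import json
import urllib.request


def build_trigger_request(access_url, account_id, job_id, token, cause):
    """Build (but do not send) a POST request that triggers a dbt job run.

    Assumes the v2 Administrative API path and token auth scheme; check both
    against the dbt API reference before relying on them.
    """
    url = f"https://{access_url}/api/v2/accounts/{account_id}/jobs/{job_id}/run/"
    # "cause" records why the run was triggered; it is shown with the run in dbt.
    body = json.dumps({"cause": cause}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
    )
```

Sending it with urllib.request.urlopen(req) should return the new run's details as JSON. In ADF, the equivalent is a web call configured with the same URL, method, headers, and body, with the token read from the key vault rather than hard-coded.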

The following video provides you with a detailed overview of how to trigger a dbt job via the API in Azure Data Factory.

To use the dbt API to trigger a job in dbt through ADF:

- In dbt, go to the job settings of the daily production job and turn off the scheduled run in the Trigger section.

- Create a pipeline in ADF to trigger the dbt job.

- As the first step in the pipeline, use a web call to securely fetch the dbt service token from a key vault in ADF.

- Set the parameters in the pipeline, including the dbt account ID and job ID, as well as the name of the key vault and secret that contains the service token.

- You can find the dbt account ID and job ID in the job's URL. For example, if your URL is https://YOUR_ACCESS_URL/deploy/88888/projects/678910/jobs/123456, the account ID is 88888 and the job ID is 123456.

- Trigger the pipeline in ADF to start the dbt job and monitor the status of the dbt job in ADF.

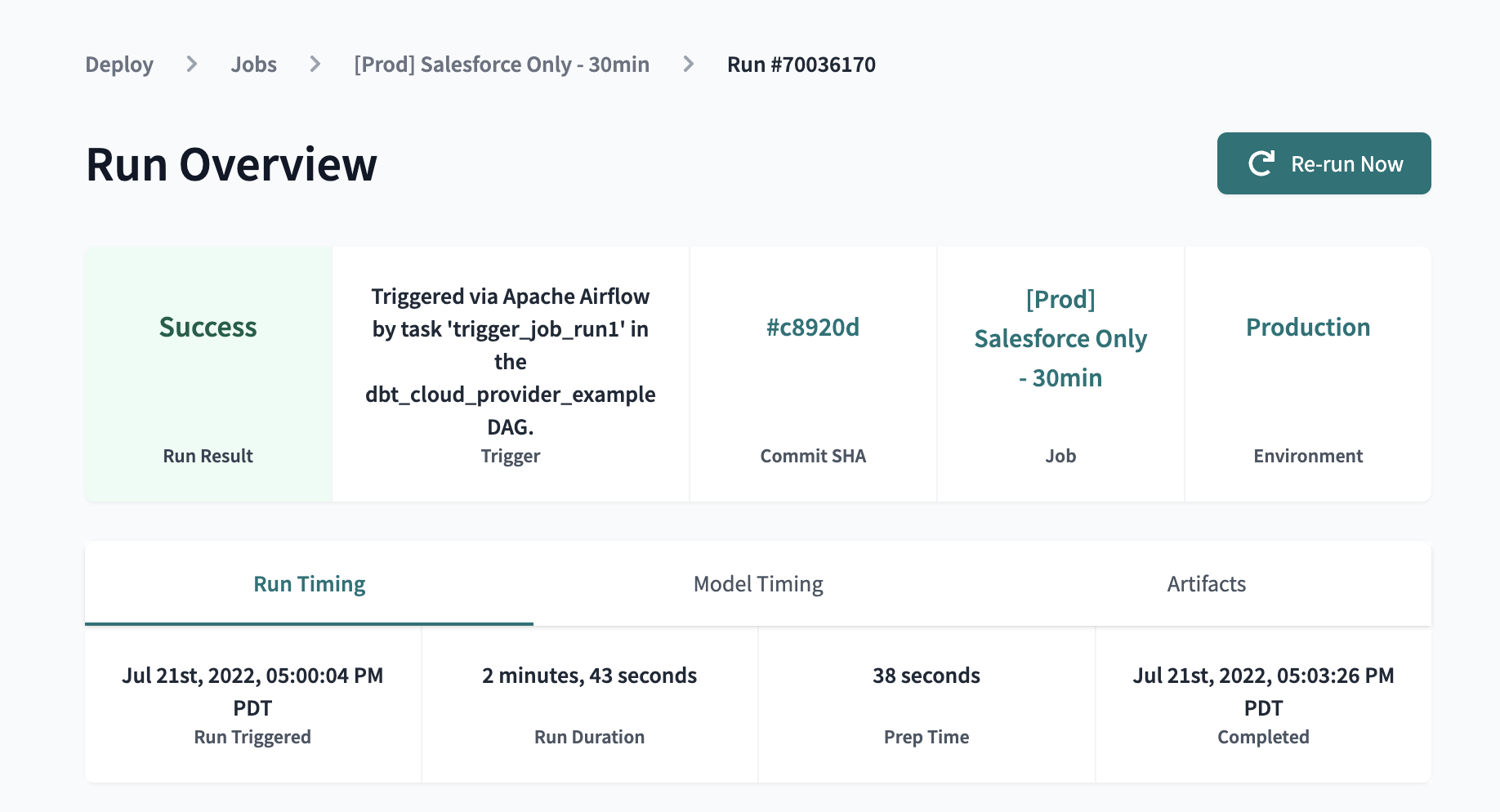

- In dbt, check the status of the job and how it was triggered.
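
If you keep job URLs rather than raw IDs in your pipeline configuration, the URL rule from the steps above is easy to script. The helper below is a minimal, hypothetical sketch (ids_from_job_url is not part of any dbt or ADF tooling) that assumes job URLs follow the /deploy/<account>/projects/<project>/jobs/<job> shape shown in the example.

```python
import re
from urllib.parse import urlparse

# Matches paths like /deploy/<account_id>/projects/<project_id>/jobs/<job_id>
_JOB_PATH = re.compile(r"/deploy/(\d+)/projects/(\d+)/jobs/(\d+)")


def ids_from_job_url(url):
    """Return (account_id, job_id) parsed from a dbt job URL."""
    match = _JOB_PATH.search(urlparse(url).path)
    if match is None:
        raise ValueError(f"Not a dbt job URL: {url}")
    # The project ID sits between the two values we need; it is discarded here.
    account_id, _project_id, job_id = (int(group) for group in match.groups())
    return account_id, job_id
```

For the example URL above, ids_from_job_url returns (88888, 123456), which you could then set as the pipeline's account ID and job ID parameters.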

Cron

Cron is a simple way to schedule bash commands. However, while it may seem like an easy route to schedule a job, you have to write your own code for the additional features a production deployment needs, such as retries, logging, and alerting. This often makes cron more complex than the other options listed here.
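
To give a sense of that extra code, the sketch below wraps a dbt command with logging and retries, two features a dedicated orchestrator would otherwise provide. It is a minimal illustration, not a production deployment: the run_with_retries name, retry count, and backoff are hypothetical, and alerting, locking against overlapping runs, and log retention are left out.

```python
import logging
import subprocess
import sys
import time

logging.basicConfig(
    stream=sys.stderr,
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(message)s",
)
log = logging.getLogger("dbt_cron")


def run_with_retries(cmd, max_attempts=3, backoff_seconds=60.0):
    """Run a command, retrying on a non-zero exit code with linear backoff.

    Returns the exit code of the last attempt so the calling script can
    pass the failure back to cron.
    """
    returncode = 1
    for attempt in range(1, max_attempts + 1):
        log.info("Attempt %d/%d: %s", attempt, max_attempts, " ".join(cmd))
        result = subprocess.run(cmd, capture_output=True, text=True)
        returncode = result.returncode
        if returncode == 0:
            log.info("Succeeded:\n%s", result.stdout)
            return 0
        # dbt writes most of its error output to stdout, so log both streams.
        log.error("Exited with code %d:\n%s", returncode, result.stdout + result.stderr)
        if attempt < max_attempts:
            # Wait longer after each failed attempt before retrying.
            time.sleep(backoff_seconds * attempt)
    return returncode
```

A crontab entry could then call a script ending in sys.exit(run_with_retries(["/path/to/venv/bin/dbt", "build"])). Because cron runs jobs with a minimal environment, an absolute path to the dbt executable is safer than relying on PATH.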

Dagster

If your organization uses Dagster, you can use the dagster_dbt library to integrate dbt commands into your pipelines. This library supports running dbt through either the dbt platform or dbt Core. Running dbt from Dagster automatically aggregates metadata about your dbt runs. Refer to the example pipeline for details.

Databricks workflows

Use Databricks workflows to call the dbt job API. This approach lets you integrate dbt jobs with other ETL processes, take advantage of dbt job features, keep a clear separation of concerns, and trigger jobs based on custom conditions or logic. Together, these benefits make your pipelines more modular, easier to debug, and more flexible to schedule.
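
One common pattern for a workflow task that calls the dbt job API is to trigger a run and then wait for it, so downstream tasks start only after dbt succeeds. The polling loop below is a hedged sketch of that waiting step. It assumes the dbt API v2 numeric run status codes listed in RUN_STATUS (verify them in the API reference), and it takes get_status as a parameter so you can plug in your own HTTP call to the run endpoint, or a fake one for testing. All names here are hypothetical.

```python
import time

# Assumed dbt API v2 run status codes; confirm against the API reference.
RUN_STATUS = {1: "Queued", 2: "Starting", 3: "Running", 10: "Success", 20: "Error", 30: "Cancelled"}
TERMINAL_STATUSES = {10, 20, 30}


def wait_for_run(get_status, poll_seconds=30.0, timeout_seconds=3600.0,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until the run reaches a terminal status.

    get_status is any callable returning the run's numeric status code.
    Returns "Success", raises RuntimeError for any other terminal status,
    and raises TimeoutError if the run is still going after timeout_seconds.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            if status != 10:
                # Failing the workflow task stops downstream tasks from running.
                raise RuntimeError(f"dbt run finished with status {RUN_STATUS[status]}")
            return RUN_STATUS[status]
        if clock() >= deadline:
            raise TimeoutError("dbt run did not finish before the timeout")
        sleep(poll_seconds)
```

Injecting sleep and clock keeps the loop free of real waiting in tests; in a workflow task you would normally leave both at their defaults.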

For more info, refer to the guide on Databricks workflows and dbt jobs.

Kestra

If your organization uses Kestra, you can use the dbt plugin to orchestrate dbt platform and dbt Core jobs. Kestra's user interface (UI) has built-in Blueprints that provide ready-to-use workflows. Navigate to the Blueprints page in the left navigation menu and select the dbt tag to find several examples of scheduling dbt Core commands and dbt jobs as part of your data pipelines.

After each scheduled or ad-hoc workflow execution, the Outputs tab in the Kestra UI lets you download and preview all dbt build artifacts. The Gantt and Topology views also render this metadata to visualize the dependencies and runtimes of your dbt models and tests. The dbt task provides links for navigating between the Kestra and dbt UIs.
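
The artifacts you download from the Outputs tab are ordinary dbt files, so you can also inspect them outside Kestra. As a minimal sketch, the helpers below read run_results.json, which dbt writes to the target directory, and list the slowest models and tests by their execution_time. The function names and the ranking choice are illustrative, not part of Kestra or dbt.

```python
import json
from pathlib import Path


def slowest_nodes(run_results, limit=5):
    """Return (unique_id, status, execution_time) for the slowest nodes.

    run_results is the parsed content of dbt's run_results.json artifact.
    """
    rows = [
        (r["unique_id"], r["status"], float(r.get("execution_time", 0.0)))
        for r in run_results.get("results", [])
    ]
    # Longest-running models and tests first.
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows[:limit]


def load_run_results(path="target/run_results.json"):
    """Read a run_results.json artifact, for example one downloaded from Kestra."""
    return json.loads(Path(path).read_text(encoding="utf-8"))
```

For example, slowest_nodes(load_run_results("run_results.json"), limit=3) would return the three longest-running nodes from a downloaded artifact.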

Orchestra

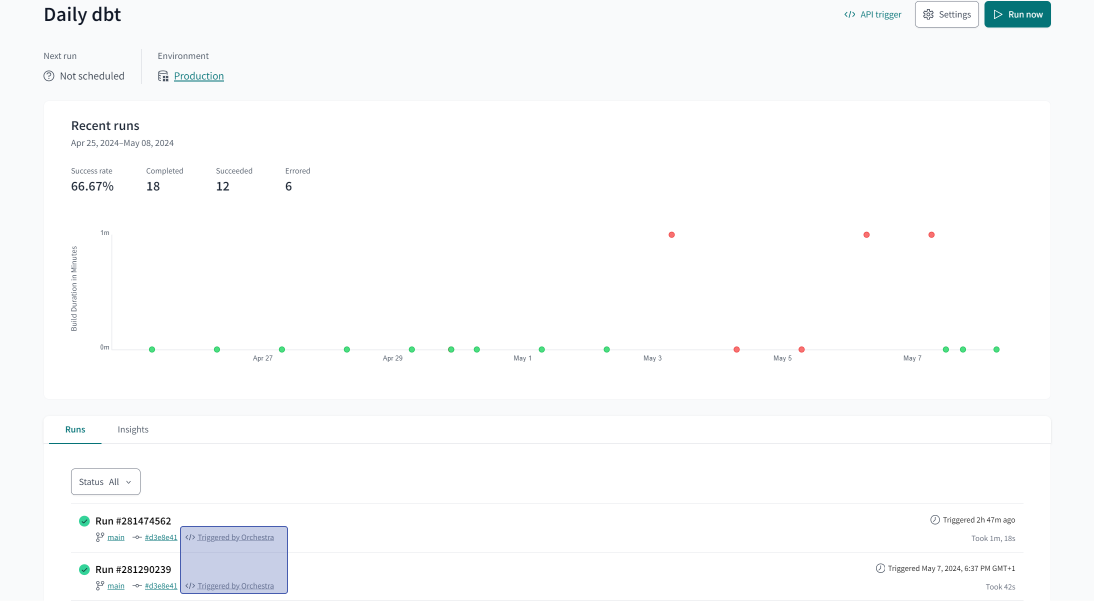

If your organization uses Orchestra, you can trigger dbt jobs using the dbt API. Create an API token from your dbt account and use this to authenticate Orchestra in the Orchestra Portal. For details, refer to the Orchestra docs on dbt.

Orchestra automatically collects metadata from your runs so you can view your dbt jobs in the context of the rest of your data stack.

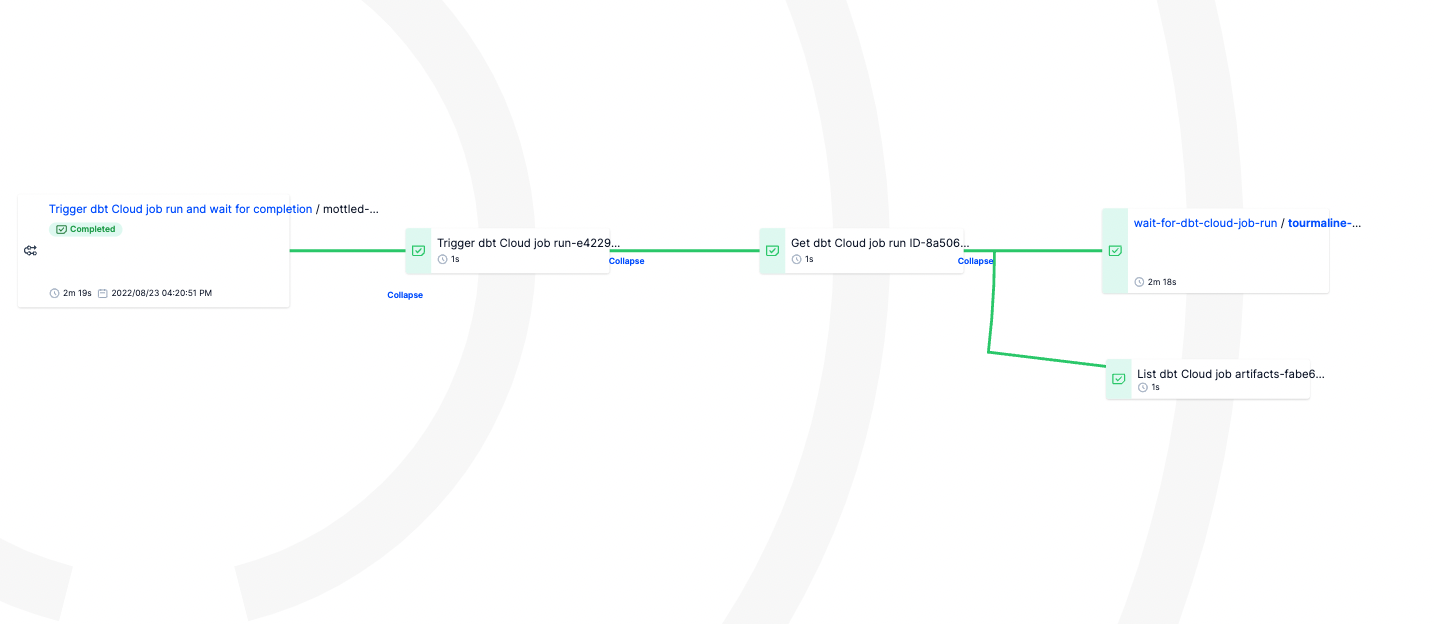

The following is an example of the run details in dbt for a job triggered by Orchestra:

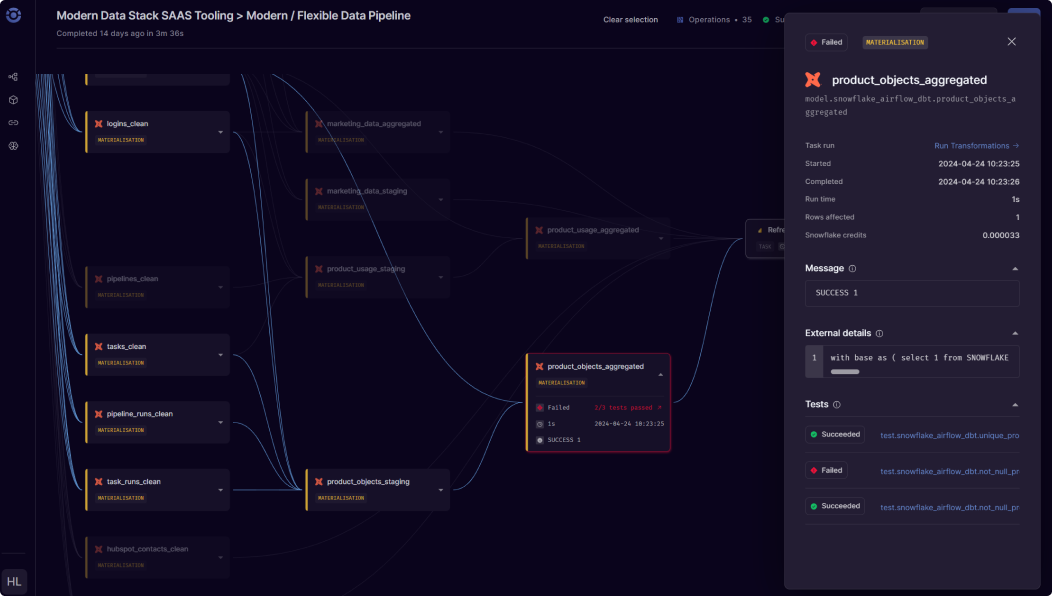

The following is an example of viewing lineage in Orchestra for dbt jobs:

Prefect

If your organization uses Prefect, the way you run your jobs depends on the Prefect version you're on and whether you're orchestrating dbt platform or dbt Core jobs. Refer to the following options:

Prefect 2

- dbt platform: Use the trigger_dbt_cloud_job_run_and_wait_for_completion flow. As the job executes, you can poll dbt through the Prefect user interface (UI) to see whether it completes without failures.

- dbt Core: Use the trigger_dbt_cli_command task.

For details on both of these methods, see the prefect-dbt docs.

Prefect 1

- dbt platform: Trigger dbt jobs with the DbtCloudRunJob task. Running this task generates a markdown artifact, viewable in the Prefect UI, that contains links to the dbt artifacts generated by the job run.

- dbt Core: Use the DbtShellTask to schedule, execute, and monitor your dbt runs, or use the supported ShellTask to execute dbt commands through the shell.

Related docs

- dbt plans and pricing

- Quickstart guides

- Webhooks for your jobs

- Orchestration guides

- Commands for your production deployment
