Continuous integration in dbt

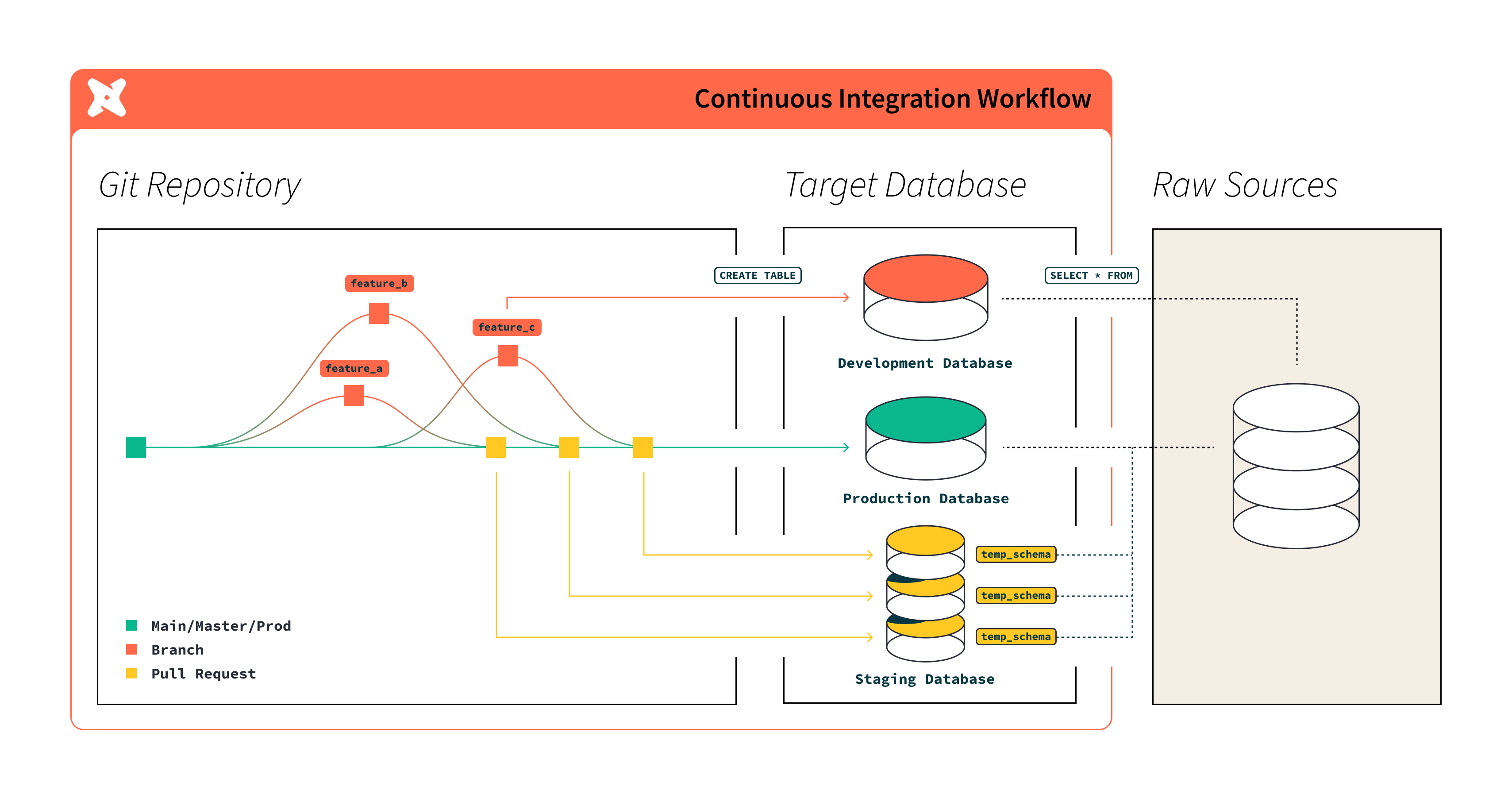

To implement a continuous integration (CI) workflow in dbt, you can set up automation that tests code changes by running CI jobs before merging to production. dbt tracks the state of what’s running in your production environment so, when you run a CI job, only the modified data assets in your pull request (PR) and their downstream dependencies are built and tested in a staging schema. You can also view the status of the CI checks (tests) directly from within the PR; this information is posted to your Git provider as soon as a CI job completes. Additionally, you can enable settings in your Git provider that allow PRs only with successful CI checks to be approved for merging.

Using CI helps:

- Provide increased confidence and assurances that project changes will work as expected in production.

- Reduce the time it takes to push code changes to production, through build and test automation, leading to better business outcomes.

- Allow organizations to make code changes in a standardized and governed way that ensures code quality without sacrificing speed.

How CI works

When you set up CI jobs, dbt listens for a notification from your Git provider indicating that a new PR has been opened or updated with new commits. When dbt receives one of these notifications, it enqueues a new run of the CI job.

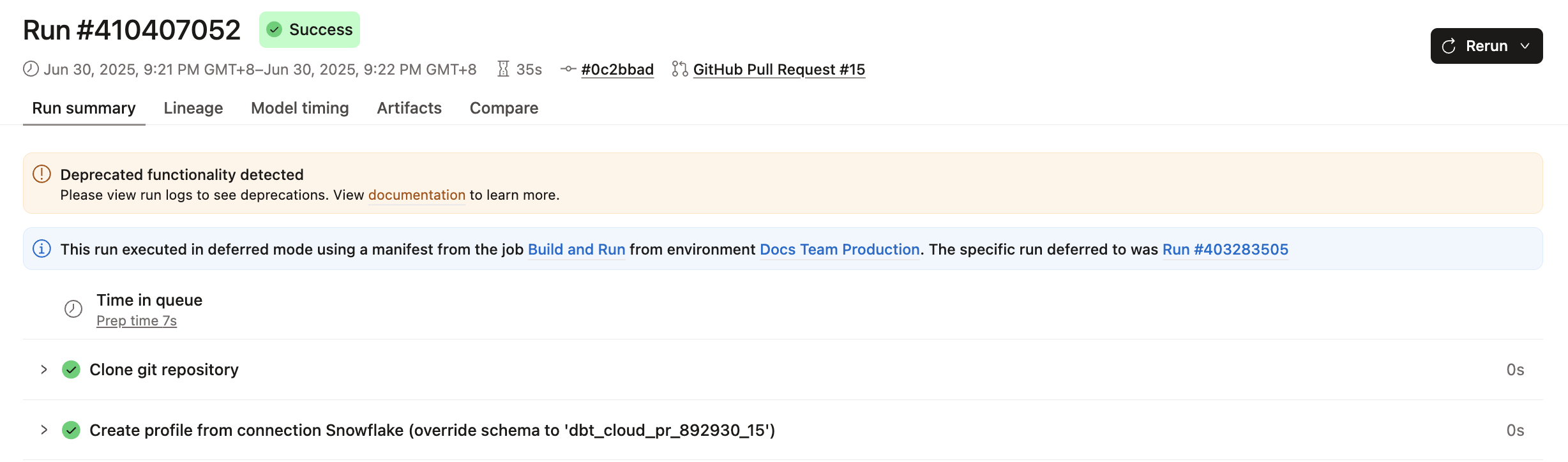

dbt builds and tests models, semantic models, metrics, and saved queries affected by the code change in a temporary schema, unique to the PR. This process ensures that the code builds without error and that it matches the expectations as defined by the project's dbt tests. The unique schema name follows the naming convention dbt_cloud_pr_<job_id>_<pr_id> (for example, dbt_cloud_pr_1862_1704) and can be found in the run details for the given run, as shown in the following image:

When the CI run completes, you can view the run status directly from within the pull request. dbt updates the pull request in GitHub, GitLab, or Azure DevOps with a status message indicating the results of the run. The status message states whether the models and tests ran successfully or not.

dbt deletes the temporary schema from your data warehouse when you close or merge the pull request. If your project has schema customization using the generate_schema_name macro, dbt might not drop the temporary schema from your data warehouse. For more information, refer to Troubleshooting.

Availability of features by Git provider

-

If your git provider has a native dbt integration, you can seamlessly set up continuous integration (CI) jobs directly within dbt.

-

For providers without native integration, you can still use the Git clone method to import your git URL and leverage the dbt Administrative API to trigger a CI job to run.

The following table outlines the available integration options and their corresponding capabilities.

| Loading table... |

Differences between CI jobs and other deployment jobs

The dbt scheduler executes CI jobs differently from other deployment jobs in these important ways:

- Concurrent CI checks — CI runs triggered by the same dbt CI job execute concurrently (in parallel), when appropriate.

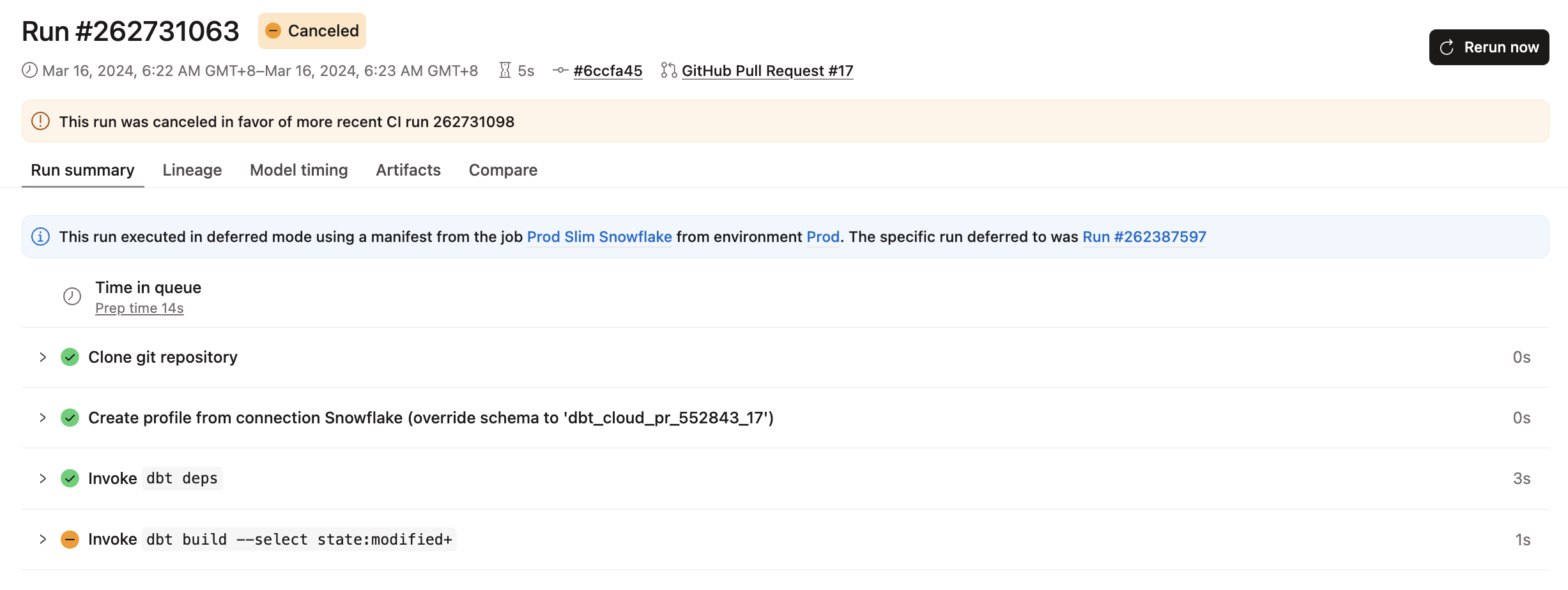

- Smart cancellation of stale builds — Automatically cancels stale, in-flight CI runs when there are new commits to the PR.

- Run slot treatment — CI runs don't consume a run slot.

- SQL linting — When enabled, automatically lints all SQL files in your project as a run step before your CI job builds.

Concurrent CI checks StarterEnterpriseEnterprise +

When you have teammates collaborating on the same dbt project creating pull requests on the same dbt repository, the same CI job will get triggered. Since each run builds into a dedicated, temporary schema that’s tied to the pull request, dbt can safely execute CI runs concurrently instead of sequentially (differing from what is done with deployment dbt jobs). Because no one needs to wait for one CI run to finish before another one can start, with concurrent CI checks, your whole team can test and integrate dbt code faster.

The following describes the conditions when CI checks are run concurrently and when they’re not:

- CI runs with different PR numbers execute concurrently.

- CI runs with the same PR number and different commit SHAs execute serially because they’re building into the same schema. dbt will run the latest commit and cancel any older, stale commits. For details, refer to Smart cancellation of stale builds.

- CI runs with the same PR number and same commit SHA, originating from different dbt projects will execute jobs concurrently. This can happen when two CI jobs are set up in different dbt projects that share the same dbt repository.

Smart cancellation of stale builds StarterEnterpriseEnterprise +

When you push a new commit to a PR, dbt enqueues a new CI run for the latest commit and cancels any CI run that is (now) stale and still in flight. This can happen when you’re pushing new commits while a CI build is still in process and not yet done. By cancelling runs in a safe and deliberate way, dbt helps improve productivity and reduce data platform spend on wasteful CI runs.

Run slot treatment StarterEnterpriseEnterprise +

CI runs don't consume run slots. This guarantees a CI check will never block a production run.

SQL linting StarterEnterpriseEnterprise +

Available on dbt release tracks and dbt Starter or Enterprise-tier accounts.

When enabled for your CI job, dbt invokes SQLFluff which is a modular and configurable SQL linter that warns you of complex functions, syntax, formatting, and compilation errors.

By default, SQL linting lints all the changed SQL files in your project (compared to the last deferred production state). Note that snapshots can be defined in YAML and .sql files, but its SQL isn't lintable and can cause errors during linting. To prevent SQLFluff from linting snapshot files, add the snapshots directory to your .sqlfluffignore file (for example snapshots/). Refer to snapshot linting for more information.

If the linter runs into errors, you can specify whether dbt should stop running the job on error or continue running it on error. When failing jobs, it helps reduce compute costs by avoiding builds for pull requests that don't meet your SQL code quality CI check.

To configure SQLFluff linting:

You can optionally configure SQLFluff linting rules to override default linting behavior.

- Use SQLFluff Configuration Files to override the default linting behavior in dbt.

- Create a

.sqlfluffconfiguration file in your project, add your linting rules to it, and dbt will use them when linting.- When configuring, you can use

dbtas the templater (for example,templater = dbt) - If you’re using the Studio IDE, dbt CLI, or any other editor, refer to Customize linting for guidance on how to add the dbt-specific (or dbtonic) linting rules we use for own project.

- When configuring, you can use

- For complete details, refer to Custom Usage in the SQLFluff documentation.

Was this page helpful?

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.