dbt Cloud now includes a suite of new features that enable configuring precise and unique connections to data platforms at the environment and user level. These enable more sophisticated setups, like connecting a project to multiple warehouse accounts, first-class support for staging environments, and user-level overrides for specific dbt versions. This gives dbt Cloud developers the features they need to tackle more complex tasks, like Write-Audit-Publish (WAP) workflows and safely testing dbt version upgrades. While you still configure a default connection at the project level and per-developer, you now have tools to get more advanced in a secure way. Soon, dbt Cloud will take this even further, allowing multiple connections to be set globally and reused as global connections.

Developer Blog | dbt Developer Hub

Find tutorials, product updates, and developer insights in the dbt Developer Blog.

LLM-powered Analytics Engineering: How we're using AI inside of our dbt project, today, with no new tools.

Cloud Data Platforms make new things possible; dbt helps you put them into production

The original paradigm shift that enabled dbt to exist and be useful was databases going to the cloud.

All of a sudden it was possible for more people to do better data work as huge blockers became huge opportunities:

- We could now dynamically scale compute on-demand, without upgrading to a larger on-prem database.

- We could now store and query enormous datasets like clickstream data, without pre-aggregating and transforming it.

Today, the next wave of innovation is happening in AI and LLMs, and it's coming to the cloud data platforms dbt practitioners are already using every day. For one example, Snowflake have just released their Cortex functions to access LLM-powered tools tuned for running common tasks against your existing datasets. In doing so, there is a new set of opportunities available to us:

Column-Level Lineage, Model Performance, and Recommendations: ship trusted data products with dbt Explorer

What’s in a data platform?

Raising a dbt project is hard work. We, as data professionals, have poured ourselves into raising happy healthy data products, and we should be proud of the insights they’ve driven. It certainly wasn’t without its challenges though — we remember the terrible twos, where we worked hard to just get the platform to walk straight. We remember the angsty teenage years where tests kept failing, seemingly just to spite us. A lot of blood, sweat, and tears are shed in the service of clean data!

Once the project could dress and feed itself, we also worked hard to get buy-in from our colleagues who put their trust in our little project. Without deep trust and understanding of what we built, our colleagues who depend on your data (or even those involved in developing it with you — it takes a village after all!) are more likely to be in your DMs with questions than in their BI tools, generating insights.

When our teammates ask about where the data in their reports comes from, how fresh it is, or about the right calculation for a metric, what a joy! This means they want to put what we’ve built to good use. The challenge is that, historically, it hasn’t been all that easy to answer these questions well. That has often meant a manual, painstaking process of cross-checking run logs and your dbt documentation site to get the stakeholder the information they need.

Enter dbt Explorer! dbt Explorer centralizes documentation, lineage, and execution metadata to reduce the work required to ship trusted data products faster.

Serverless, free-tier data stack with dlt + dbt core.

The problem, the builder and tooling

The problem: My partner and I are considering buying a property in Portugal. There is no reference data for the real estate market here - how many houses are being sold, for what price? Nobody knows except the property office and maybe the banks, and they don’t readily divulge this information. The only data source we have is Idealista, which is a portal where real estate agencies post ads.

Unfortunately, there are significantly fewer properties than ads - it seems many real estate companies re-post the same ad that others do, with intentionally different data and often misleading bits of info. The real estate agencies do this so the interested parties reach out to them for clarification, and from there they can start a sales process. At the same time, the website with the ads is incentivised to allow this to continue as they get paid per ad, not per property.

The builder: I’m a data freelancer who deploys end to end solutions, so when I have a data problem, I cannot just let it go.

The tools: I want to be able to run my project on Google Cloud Functions due to the generous free tier. dlt is a new Python library for declarative data ingestion which I have wanted to test for some time. Finally, I will use dbt Core for transformation.

Deprecation of dbt Server

Summary

We’re announcing that dbt Server is officially deprecated and will no longer be maintained by dbt Labs going forward. You can continue to use the repository and fork it for your needs. We’re also looking for a maintainer of the repository from our community! If you’re interested, please reach out by opening an issue in the repository.

Why are we deprecating dbt Server?

At dbt Labs, we are continually working to build rich experiences that help our users scale collaboration around data. To achieve this vision, we need to take moments to think about which products are contributing to this goal, and sometimes make hard decisions about the ones that are not, so that we can focus on enhancing the most impactful ones.

dbt Server previously supported our legacy Semantic Layer, which was fully deprecated in December 2023. In October 2023, we introduced the GA of the revamped dbt Semantic Layer with significant improvements, made possible by the acquisition of Transform and the integration of MetricFlow into dbt.

The dbt Semantic Layer is now fully independent of dbt Server and operates on MetricFlow Server, a powerful new proprietary technology designed for enhanced scalability. We’re incredibly excited about the new updates and encourage you to check out our documentation, as well as this blog on how the product works.

The deprecation of dbt Server and the updates to the Semantic Layer signify the evolution of the dbt ecosystem towards a greater focus on in-product and out-of-the-box experiences around connectivity, scale, and flexibility. We are excited that you are along with us on this journey.

More time coding, less time waiting: Mastering defer in dbt

Picture this — you’ve got a massive dbt project, thousands of models chugging along, creating actionable insights for your stakeholders. A ticket comes your way — a model needs to be refactored! "No problem," you think to yourself, "I will simply make that change and test it locally!" You look at your lineage, and realize this model is many layers deep, buried underneath a long chain of tables and views.

“OK,” you think further, “I’ll just run a dbt build -s +my_changed_model to make sure I have everything I need built into my dev schema and I can test my changes”. You run the command. You wait. You wait some more. You get some coffee, and completely take yourself out of your dbt development flow state. A lot of time and money down the drain to get to a point where you can start your work. That’s no good!

Luckily, dbt’s defer functionality allows you to build only what you care about when you need it, and nothing more. This feature helps developers spend less time and money in development, helping ship trusted data products faster. dbt Cloud offers native support for this workflow in development, so you can start deferring without any additional overhead!

How to integrate with dbt

Overview

Over the course of my three years running the Partner Engineering team at dbt Labs, the most common question I've been asked is, How do we integrate with dbt? Because those conversations often start out at the same place, I decided to create this guide so I’m no longer the blocker to fundamental information. This also allows us to skip the intro and get to the fun conversations so much faster, like what a joint solution for our customers would look like.

This guide doesn't include how to integrate with dbt Core. If you’re interested in creating a dbt adapter, please check out the adapter development guide instead.

Instead, we're going to focus on integrating with dbt Cloud. Integrating with dbt Cloud is a key requirement to become a dbt Labs technology partner, opening the door to a variety of collaborative commercial opportunities.

Here I'll cover how to get started, potential use cases you want to solve for, and the points of integration for doing so.

How we built consistent product launch metrics with the dbt Semantic Layer

There’s nothing quite like the feeling of launching a new product. On launch day emotions can range from excitement, to fear, to accomplishment all in the same hour. Once the dust settles and the product is in the wild, the next thing the team needs to do is track how the product is doing. How many users do we have? How is performance looking? What features are customers using? How often? Answering these questions is vital to understanding the success of any product launch.

At dbt we recently made the Semantic Layer Generally Available. The Semantic Layer lets teams define business metrics centrally, in dbt, and access them in multiple analytics tools through our semantic layer APIs. I’m a Product Manager on the Semantic Layer team, and the launch of the Semantic Layer put our team in an interesting, somewhat “meta,” position: we need to understand how a product launch is doing, and the product we just launched is designed to make defining and consuming metrics much more efficient. It’s the perfect opportunity to put the semantic layer through its paces for product analytics. This blog post walks through the end-to-end process we used to set up product analytics for the dbt Semantic Layer using the dbt Semantic Layer.

Why you should specify a production environment in dbt Cloud

You should split your Jobs across Environments in dbt Cloud based on their purposes (e.g. Production and Staging/CI) and set one environment as Production. This will improve your CI experience and enable you to use dbt Explorer.

Environmental segmentation has always been an important part of the analytics engineering workflow:

- When developing new models you can process a smaller subset of your data by using target.name or an environment variable.

- By building your production-grade models into a different schema and database, you can experiment in peace without being worried that your changes will accidentally impact downstream users.

- Using dedicated credentials for production runs, instead of an analytics engineer's individual dev credentials, ensures that things don't break when that long-tenured employee finally hangs up their IDE.

Historically, dbt Cloud required a separate environment for Development, but was otherwise unopinionated in how you configured your account. This mostly just worked – as long as you didn't have anything more complex than a CI job mixed in with a couple of production jobs – because important constructs like deferral in CI and documentation were only ever tied to a single job.

But as companies' dbt deployments have grown more complex, it doesn't make sense to assume that a single job is enough anymore. We need to exchange a job-oriented strategy for a more mature and scalable environment-centric view of the world. To support this, a recent change in dbt Cloud enables project administrators to mark one of their environments as the Production environment, just as has long been possible for the Development environment.

Explicitly separating your Production workloads lets dbt Cloud be smarter with the metadata it creates, and is particularly important for two new features: dbt Explorer and the revised CI workflows.

To defer or to clone, that is the question

Hi all, I’m Kshitij, a senior software engineer on the Core team at dbt Labs.

One of the coolest moments of my career here thus far has been shipping the new dbt clone command as part of the dbt-core v1.6 release.

However, one of the questions I’ve received most frequently is guidance around “when” to clone that goes beyond the documentation on “how” to clone. In this blog post, I’ll attempt to provide this guidance by answering these FAQs:

- What is dbt clone?

- How is it different from deferral?

- Should I defer or should I clone?

Optimizing Materialized Views with dbt

This blog post was updated on December 18, 2023 to cover the support of MVs on dbt-bigquery and updates on how to test MVs.

Introduction

The year was 2020. I was a kitten-only household, and dbt Labs was still Fishtown Analytics. An enterprise customer I was working with, Jetblue, asked me for help running their dbt models every 2 minutes to meet a 5-minute SLA.

After getting over the initial terror, we talked through the use case and soon realized there was a better option. Together with my team, I created lambda views to meet the need.

Flash forward to 2023. I’m writing this as my giant dog snores next to me (don’t worry the cats have multiplied as well). Jetblue has outgrown lambda views due to performance constraints (a view can only be so performant) and we are at another milestone in dbt’s journey to support streaming. What. a. time.

Today we are announcing that we now support Materialized Views in dbt. So, what does that mean?

Create dbt Documentation and Tests 10x faster with ChatGPT

Whether you are building your pipelines in dbt for the first time or just adding a new model once in a while, good documentation and testing should always be a priority for you and your team. Why do we avoid it like the plague then? Because it’s a hassle having to write down each individual field and its description in layman’s terms, and to figure out what tests should be performed to ensure the data is fine and dandy. How can we make this process faster and less painful?

By now, everyone knows the wonders of the GPT models for code generation and pair programming so this shouldn’t come as a surprise. But ChatGPT really shines at inferring the context of verbosely named fields from database table schemas. So in this post I am going to help you 10x your documentation and testing speed by using ChatGPT to do most of the leg work for you.

Data Vault 2.0 with dbt Cloud

Data Vault 2.0 is a data modeling technique designed to help scale large data warehousing projects. It is a rigid, prescriptive system detailed rigorously in a book that has become the bible for this technique.

So why Data Vault? Have you experienced a data warehousing project with 50+ data sources, with 25+ data developers working on the same data platform, or data spanning 5+ years with two or more generations of source systems? If not, it might be hard to initially understand the benefits of Data Vault, and maybe Kimball modelling is better for you. But if you are in any of the situations listed, then this is the article for you!

Building a historical user segmentation model with dbt

Introduction

Most data modeling approaches for customer segmentation are based on a wide table with user attributes. This table only stores the current attributes for each user, and is then loaded into the various SaaS platforms via Reverse ETL tools.

Take for example a Customer Experience (CX) team that uses Salesforce as a CRM. The users will create tickets to ask for assistance, and the CX team will start attending to them in the order that they are created. This is a good first approach, but not a data-driven one.

An improvement to this would be to prioritize the tickets based on the customer segment, answering our most valuable customers first. An Analytics Engineer can build a segmentation to identify the power users (for example with an RFM approach) and store it in the data warehouse. The Data Engineering team can then export that user attribute to the CRM, allowing the customer experience team to build rules on top of it.
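To make the RFM idea a little more concrete, here is a minimal sketch in Python. It is not the model from the post: the Order record, the segment names, and every threshold are hypothetical, and in a real project this logic would more likely live in a dbt model written in SQL.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Order:
    # Hypothetical input record; field names are assumptions for illustration.
    user_id: str
    order_date: date
    amount: float


def rfm_metrics(orders, as_of):
    """Return {user_id: (recency_days, frequency, monetary)} as of a given date."""
    metrics = {}
    for order in orders:
        recency = (as_of - order.order_date).days
        if order.user_id in metrics:
            r, f, m = metrics[order.user_id]
            # Recency is the age of the most recent order, so keep the minimum.
            metrics[order.user_id] = (min(r, recency), f + 1, m + order.amount)
        else:
            metrics[order.user_id] = (recency, 1, order.amount)
    return metrics


def segment(recency_days, frequency, monetary):
    """Map RFM values to a segment name. All thresholds here are made up."""
    if recency_days <= 30 and frequency >= 3 and monetary >= 500:
        return "power_user"
    if recency_days > 180:
        return "dormant"
    return "regular"


def segment_users(orders, as_of):
    """Compute the current segment for every user that has at least one order."""
    return {user: segment(*values) for user, values in rfm_metrics(orders, as_of).items()}
```

Calling segment_users(orders, as_of) with a list of Order records returns one segment per user as of that date, which is the kind of single current attribute that would then be exported to the CRM.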

Accelerate your documentation workflow: Generate docs for whole folders at once

At Lunar, most of our dbt models are sourced from an event-driven architecture. As an example, we have the following models for our activity_based_interest folder in our ingestion layer:

- activity_based_interest_activated.sql

- activity_based_interest_deactivated.sql

- activity_based_interest_updated.sql

- downgrade_interest_level_for_user.sql

- set_inactive_interest_rate_after_july_1st_in_bec_for_user.sql

- set_inactive_interest_rate_from_july_1st_in_bec_for_user.sql

- set_interest_levels_from_june_1st_in_bec_for_user.sql

This results in a lot of the same columns (e.g. account_id) existing in different models, across different layers. This means I end up:

- Writing/copy-pasting the same documentation over and over again

- Halfway through, realizing I could improve the wording to make it easier to understand, and go back and update the .yml files I already did

- Realizing I made a syntax error in my .yml file, so I go back and fix it

- Realizing the columns are defined differently with different wording being used in other folders in our dbt project

- Reconsidering my choice of career and praying that a large language model will steal my job

- Considering if there’s a better way to be generating documentation used across different models

Modeling ragged time-varying hierarchies

This article covers an approach to handling time-varying ragged hierarchies in a dimensional model. These kinds of data structures are commonly found in manufacturing, where components of a product have both parents and children of arbitrary depth and those components may be replaced over the product's lifetime. The strategy described here simplifies many common types of analytical and reporting queries.

To help visualize this data, we're going to pretend we are a company that manufactures and rents out eBikes in a ride share application. When we build a bike, we keep track of the serial numbers of the components that make up the bike. Any time something breaks and needs to be replaced, we track the old parts that were removed and the new parts that were installed. We also precisely track the mileage accumulated on each of our bikes. Our primary analytical goal is to be able to report on the expected lifetime of each component, so we can prioritize improving that component and reduce costly maintenance.
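As a rough illustration of the analytical goal described above, the following Python sketch averages the distance ridden per component type. It deliberately ignores the hierarchy itself. The Installation record and its field names are assumptions made for this example, not the schema used in the article.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Installation:
    # Hypothetical record: one row per part per period installed on a bike.
    bike_id: str
    component_type: str
    serial_number: str
    installed_at_km: float          # bike mileage when the part was installed
    removed_at_km: Optional[float]  # None while the part is still on the bike


def mean_lifetime_km(installations):
    """Average distance ridden per component type, counting removed parts only."""
    distances = defaultdict(list)
    for inst in installations:
        if inst.removed_at_km is None:
            continue  # still in service, so its final lifetime is not yet known
        distances[inst.component_type].append(inst.removed_at_km - inst.installed_at_km)
    return {ctype: sum(kms) / len(kms) for ctype, kms in distances.items()}
```

Skipping parts that are still in service is a simplification: it keeps the sketch short, but an estimate built only from removed parts will tend to understate how long components last.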

Data engineers + dbt v1.5: Evolving the craft for scale

I, Sung, entered the data industry by chance in Fall 2014. I was using this thing called audit command language (ACL) to automate debits equal credits for accounting analytics (yes, it’s as tedious as it sounds). I remember working my butt off in a hotel room in Des Moines, Iowa where the most interesting thing there was a Panda Express. It was late in the AM. I’m thinking about 2 am. And I took a step back and thought to myself, “Why am I working so hard for something that I just don’t care about with tools that hurt more than help?”

Why we're deprecating the dbt_metrics package

Hello, my dear data people.

If you haven’t read Nick & Roxi’s blog post about what’s coming in the future of the dbt Semantic Layer, I highly recommend you read through that, as it gives helpful context around what the future holds.

With that said, it has come time for us to bid adieu to our beloved dbt_metrics package. Upon the release of dbt-core v1.6 in late July, we will be deprecating support for the dbt_metrics package.

How we reduced a 6-hour runtime in Alteryx to 9 minutes with dbt and Snowflake

Alteryx is a visual data transformation platform with a user-friendly interface and drag-and-drop tools. Nonetheless, Alteryx may struggle to cope with growing complexity in an organization’s data pipeline, and it can become a suboptimal tool when companies start dealing with large and complex data transformations. In such cases, moving to dbt can be a natural step, since dbt is designed to manage complex data transformation pipelines in a scalable, efficient, and more explicit manner. In our case, the transition also involved migrating from an on-premises SQL Server to Snowflake. In this article, we describe the differences between Alteryx and dbt, and how we reduced a client's 6-hour runtime in Alteryx to 9 minutes with dbt and Snowflake at Indicium Tech.

Building a Kimball dimensional model with dbt

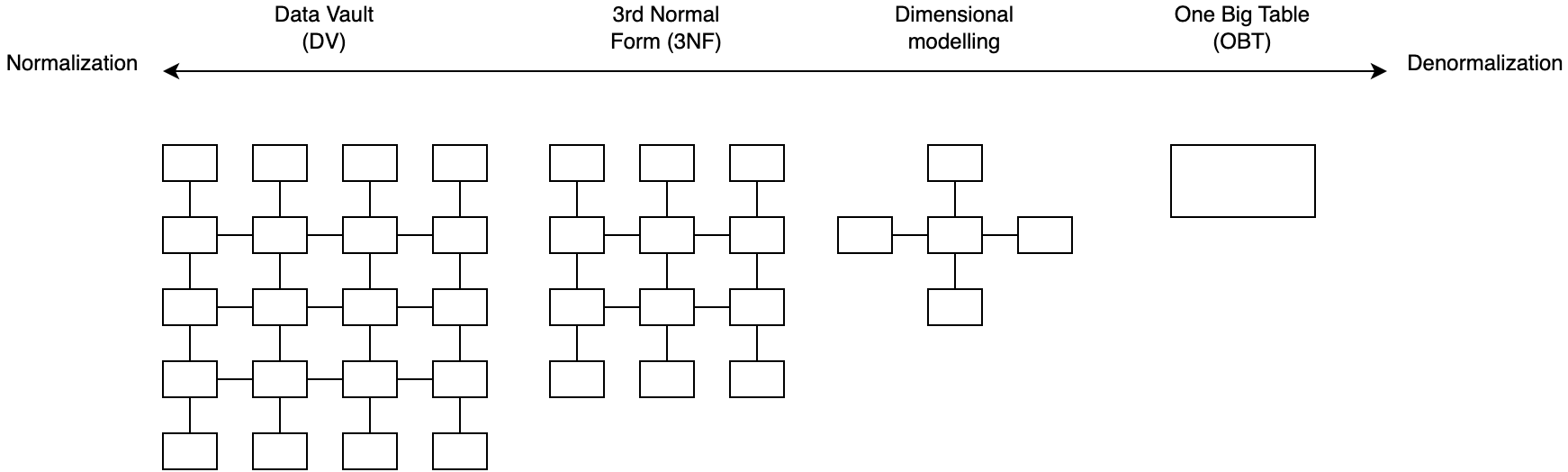

While the relevance of dimensional modeling has been debated by data practitioners, it is still one of the most widely adopted data modeling techniques for analytics.

Despite its popularity, resources on how to create dimensional models using dbt remain scarce and lack detail. This tutorial aims to solve this by providing the definitive guide to dimensional modeling with dbt.

By the end of this tutorial, you will:

- Understand dimensional modeling concepts

- Set up a mock dbt project and database

- Identify the business process to model

- Identify the fact and dimension tables

- Create the dimension tables

- Create the fact table

- Document the dimensional model relationships

- Consume the dimensional model