Apache Spark configurations

dbt-databricksIf you're using Databricks, the dbt-databricks adapter is recommended over dbt-spark. If you're still using dbt-spark with Databricks consider migrating from the dbt-spark adapter to the dbt-databricks adapter.

For the Databricks version of this page, refer to Databricks setup.

See Databricks configuration for the Databricks version of this page.

Configuring tables

When materializing a model as table, you may include several optional configs that are specific to the dbt-spark plugin, in addition to the standard model configs.

| Option | Description | Required? | Example |

|---|---|---|---|

| file_format | The file format to use when creating tables (parquet, delta, iceberg, hudi, csv, json, text, jdbc, orc, hive or libsvm). | Optional | parquet |

| location_root | The created table uses the specified directory to store its data. The table alias is appended to it. | Optional | /mnt/root |

| partition_by | Partition the created table by the specified columns. A directory is created for each partition. | Optional | date_day |

| clustered_by | Each partition in the created table will be split into a fixed number of buckets by the specified columns. | Optional | country_code |

| buckets | The number of buckets to create while clustering | Required if clustered_by is specified | 8 |

Incremental models

dbt seeks to offer useful, intuitive modeling abstractions by means of its built-in configurations and materializations. Because there is so much variance between Apache Spark clusters out in the world—not to mention the powerful features offered to Databricks users by the Delta file format and custom runtime—making sense of all the available options is an undertaking in its own right.

Alternatively, you can use Apache Iceberg or Apache Hudi file format with Apache Spark runtime for building incremental models.

For that reason, the dbt-spark plugin leans heavily on the incremental_strategy config. This config tells the incremental materialization how to build models in runs beyond their first. It can be set to one of three values:

append(default): Insert new records without updating or overwriting any existing data.insert_overwrite: Ifpartition_byis specified, overwrite partitions in the table with new data. If nopartition_byis specified, overwrite the entire table with new data.merge(Delta, Iceberg and Hudi file format only): Match records based on aunique_key; update old records, insert new ones. (If nounique_keyis specified, all new data is inserted, similar toappend.)

Each of these strategies has its pros and cons, which we'll discuss below. As with any model config, incremental_strategy may be specified in dbt_project.yml or within a model file's config() block.

The append strategy

Following the append strategy, dbt will perform an insert into statement with all new data. The appeal of this strategy is that it is straightforward and functional across all platforms, file types, connection methods, and Apache Spark versions. However, this strategy cannot update, overwrite, or delete existing data, so it is likely to insert duplicate records for many data sources.

Specifying append as the incremental strategy is optional, since it's the default strategy used when none is specified.

- Source code

- Run code

{{ config(

materialized='incremental',

incremental_strategy='append',

) }}

-- All rows returned by this query will be appended to the existing table

select * from {{ ref('events') }}

{% if is_incremental() %}

where event_ts > (select max(event_ts) from {{ this }})

{% endif %}

create temporary view spark_incremental__dbt_tmp as

select * from analytics.events

where event_ts >= (select max(event_ts) from {{ this }})

;

insert into table analytics.spark_incremental

select `date_day`, `users` from spark_incremental__dbt_tmp

The insert_overwrite strategy

This strategy is most effective when specified alongside a partition_by clause in your model config. dbt will run an atomic insert overwrite statement that dynamically replaces all partitions included in your query. Be sure to re-select all of the relevant data for a partition when using this incremental strategy.

If no partition_by is specified, then the insert_overwrite strategy will atomically replace all contents of the table, overriding all existing data with only the new records. The column schema of the table remains the same, however. This can be desirable in some limited circumstances, since it minimizes downtime while the table contents are overwritten. The operation is comparable to running truncate + insert on other databases. For atomic replacement of Delta-formatted tables, use the table materialization (which runs create or replace) instead.

Usage notes:

- This strategy is not supported for tables with

file_format: delta. - This strategy is not available when connecting via Databricks SQL endpoints (

method: odbc+endpoint). - If connecting via a Databricks cluster + ODBC driver (

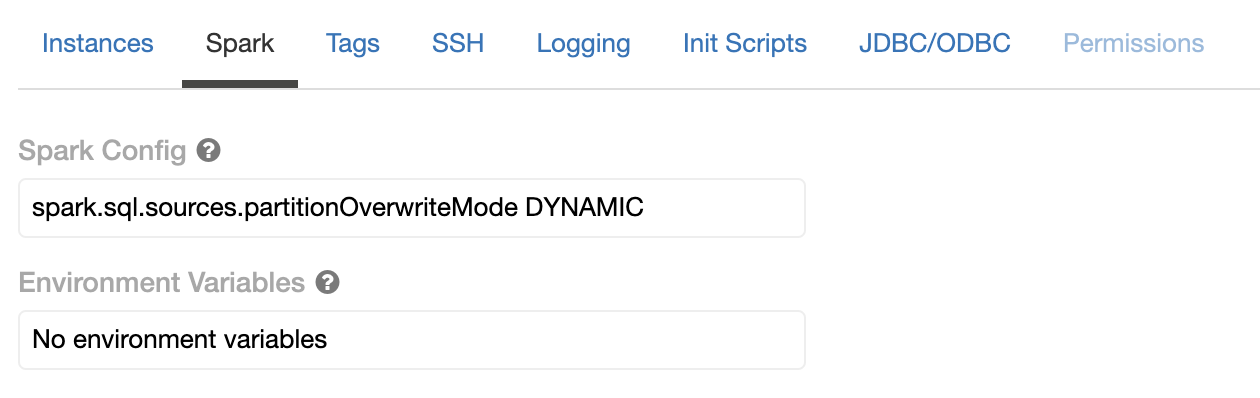

method: odbc+cluster), you must includeset spark.sql.sources.partitionOverwriteMode DYNAMICin the cluster Spark Config in order for dynamic partition replacement to work (incremental_strategy: insert_overwrite+partition_by).

- Source code

- Run code

{{ config(

materialized='incremental',

partition_by=['date_day'],

file_format='parquet'

) }}

/*

Every partition returned by this query will be overwritten

when this model runs

*/

with new_events as (

select * from {{ ref('events') }}

{% if is_incremental() %}

where date_day >= date_add(current_date, -1)

{% endif %}

)

select

date_day,

count(*) as users

from events

group by 1

create temporary view spark_incremental__dbt_tmp as

with new_events as (

select * from analytics.events

where date_day >= date_add(current_date, -1)

)

select

date_day,

count(*) as users

from events

group by 1

;

insert overwrite table analytics.spark_incremental

partition (date_day)

select `date_day`, `users` from spark_incremental__dbt_tmp

The merge strategy

Usage notes: The merge incremental strategy requires:

file_format: delta, iceberg or hudi- Databricks Runtime 5.1 and above for delta file format

- Apache Spark for Iceberg or Hudi file format

dbt will run an atomic merge statement which looks nearly identical to the default merge behavior on Snowflake and BigQuery. If a unique_key is specified (recommended), dbt will update old records with values from new records that match on the key column. If a unique_key is not specified, dbt will forgo match criteria and simply insert all new records (similar to append strategy).

- Source code

- Run code

{{ config(

materialized='incremental',

file_format='delta', # or 'iceberg' or 'hudi'

unique_key='user_id',

incremental_strategy='merge'

) }}

with new_events as (

select * from {{ ref('events') }}

{% if is_incremental() %}

where date_day >= date_add(current_date, -1)

{% endif %}

)

select

user_id,

max(date_day) as last_seen

from events

group by 1

create temporary view merge_incremental__dbt_tmp as

with new_events as (

select * from analytics.events

where date_day >= date_add(current_date, -1)

)

select

user_id,

max(date_day) as last_seen

from events

group by 1

;

merge into analytics.merge_incremental as DBT_INTERNAL_DEST

using merge_incremental__dbt_tmp as DBT_INTERNAL_SOURCE

on DBT_INTERNAL_SOURCE.user_id = DBT_INTERNAL_DEST.user_id

when matched then update set *

when not matched then insert *

Persisting model descriptions

Relation-level docs persistence is supported in dbt v0.17.0. For more information on configuring docs persistence, see the docs.

When the persist_docs option is configured appropriately, you'll be able to

see model descriptions in the Comment field of describe [table] extended

or show table extended in [database] like '*'.

Always schema, never database

Apache Spark uses the terms "schema" and "database" interchangeably. dbt understands

database to exist at a higher level than schema. As such, you should never

use or set database as a node config or in the target profile when running dbt-spark.

If you want to control the schema/database in which dbt will materialize models,

use the schema config and generate_schema_name macro only.

Default file format configurations

To access advanced incremental strategies features, such as

snapshots and the merge incremental strategy, you will want to

use the Delta, Iceberg or Hudi file format as the default file format when materializing models as tables.

It's quite convenient to do this by setting a top-level configuration in your project file:

models:

+file_format: delta # or iceberg or hudi

seeds:

+file_format: delta # or iceberg or hudi

snapshots:

+file_format: delta # or iceberg or hudi