Quickstart for dbt and Redshift

Introduction

In this quickstart guide, you'll learn how to use dbt with Redshift. It will show you how to:

- Set up a Redshift cluster.

- Load sample data into your Redshift account.

- Connect dbt to Redshift.

- Take a sample query and turn it into a model in your dbt project. A model in dbt is a select statement.

- Add tests to your models.

- Document your models.

- Schedule a job to run.

Check out dbt Fundamentals for free if you're interested in course learning with videos.

Prerequisites

- You have a dbt account.

- You have an AWS account with permissions to execute a CloudFormation template to create appropriate roles and a Redshift cluster.

Related content

- Learn more with dbt Learn courses

- CI jobs

- Deploy jobs

- Job notifications

- Source freshness

Create a Redshift cluster

- Sign in to your AWS account as a root user or an IAM user depending on your level of access.

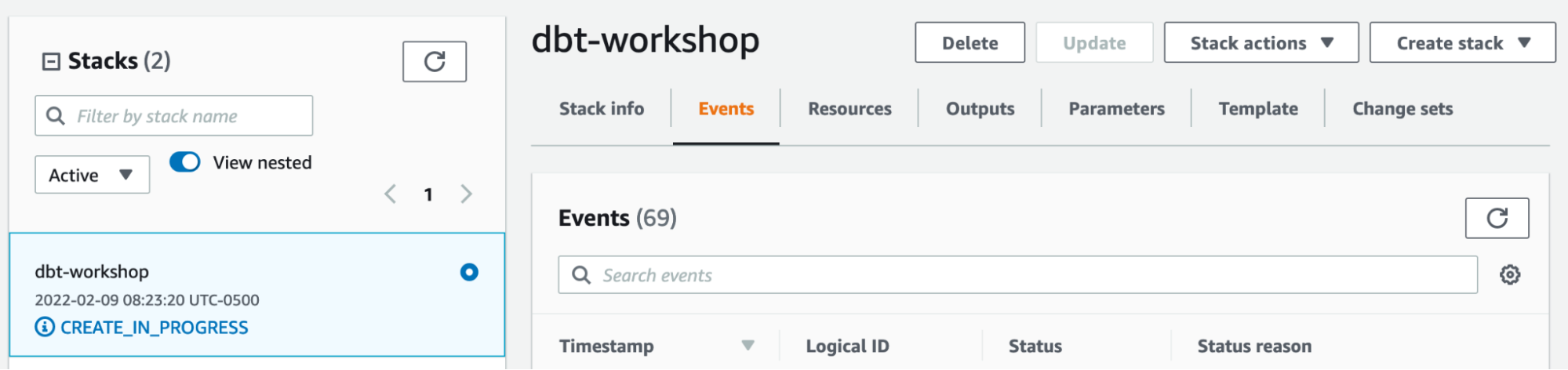

- Use a CloudFormation template to quickly set up a Redshift cluster. A CloudFormation template is a configuration file that automatically spins up the necessary resources in AWS. Start a CloudFormation stack; you can refer to the create-dbtworkshop-infr JSON file for more template details.

To avoid connectivity issues with dbt, make sure to allow inbound traffic on port 5439 from dbt's IP addresses in your Redshift security groups and Network Access Control Lists (NACLs) settings.

- Click Next for each page until you reach the Select acknowledgement checkbox. Select I acknowledge that AWS CloudFormation might create IAM resources with custom names and click Create Stack. You should land on the stack page with a CREATE_IN_PROGRESS status.

- When the stack status changes to CREATE_COMPLETE, click the Outputs tab on the top to view information that you will use throughout the rest of this guide. Save those credentials for later by keeping this open in a tab.

- Type Redshift in the search bar at the top and click Amazon Redshift.

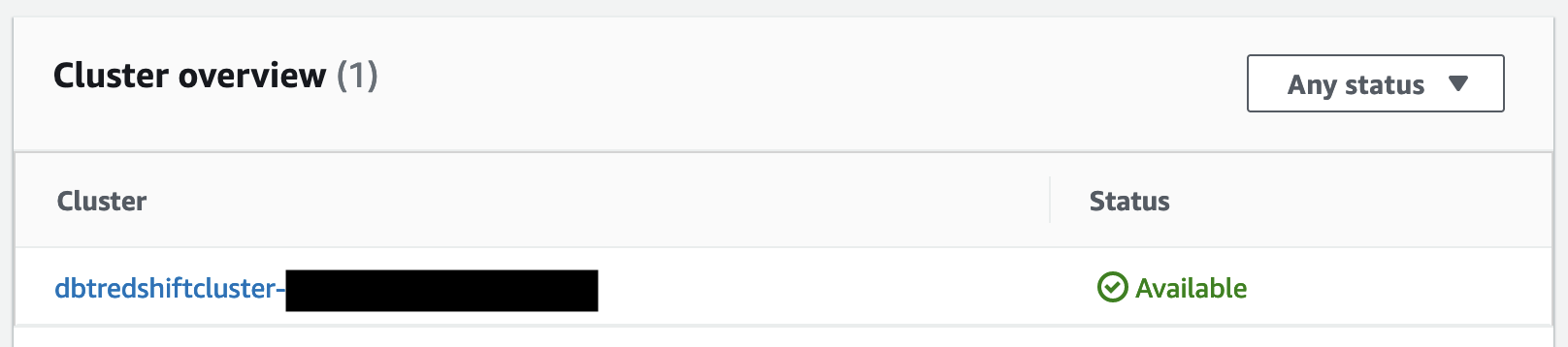

Confirm that your new Redshift cluster is listed in Cluster overview. Select your new cluster. The cluster name should begin with

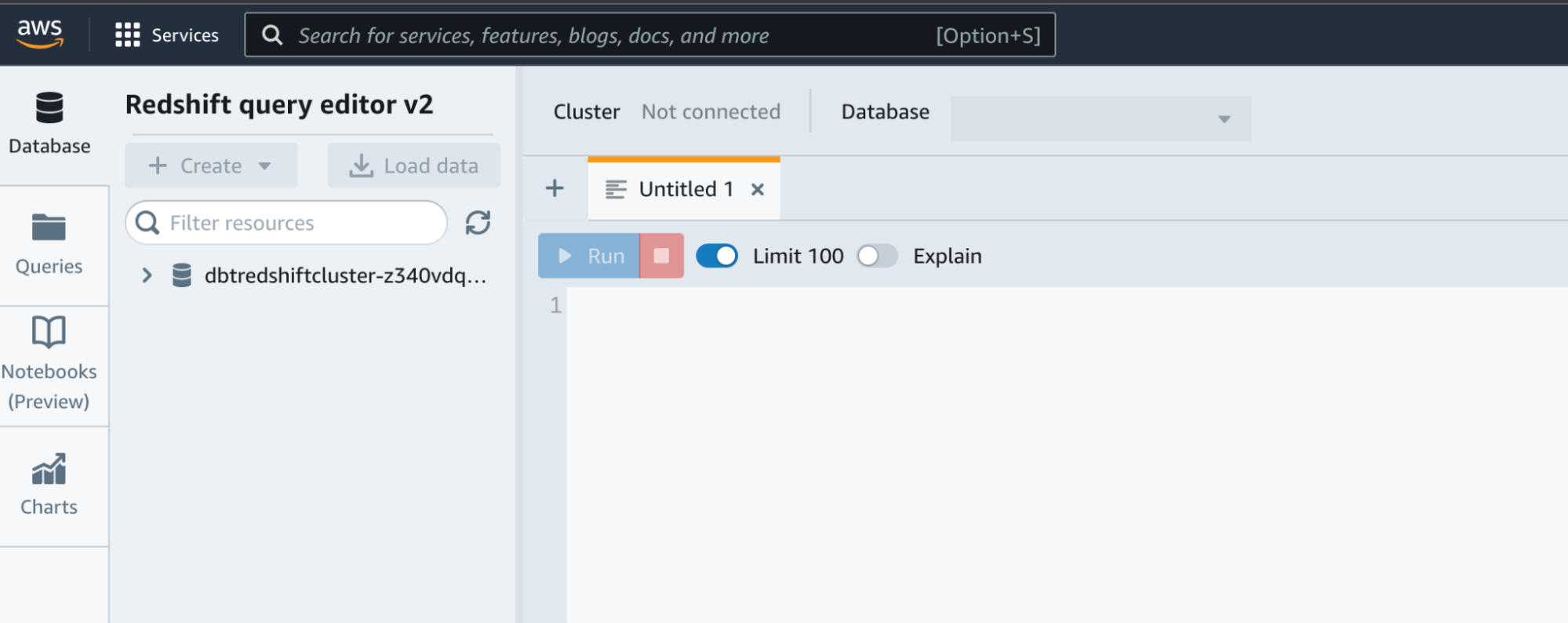

dbtredshiftcluster-. Then, click Query Data. You can choose the classic query editor or v2. We will be using the v2 version for the purpose of this guide.

-

You might be asked to Configure account. For this sandbox environment, we recommend selecting “Configure account”.

-

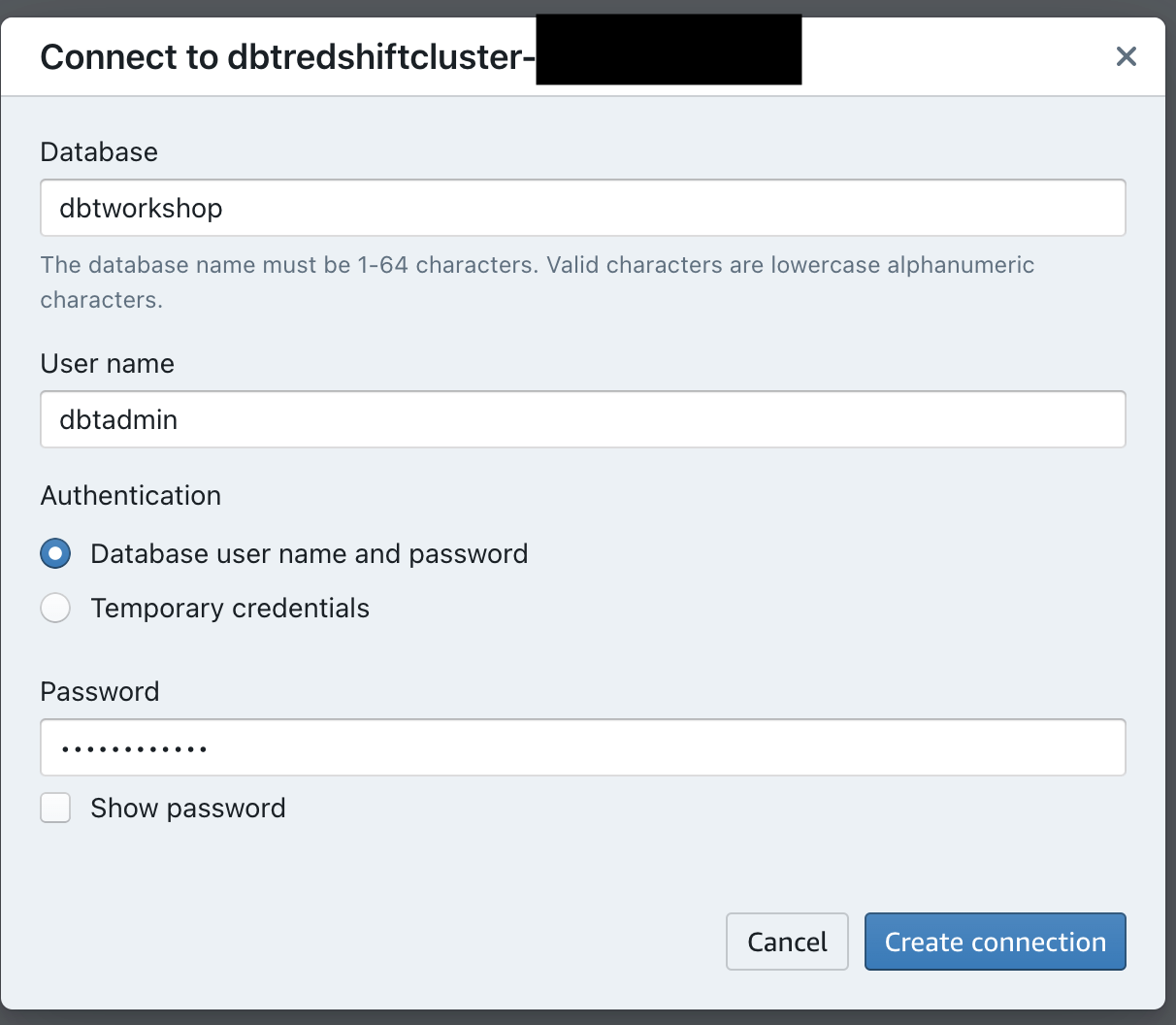

Select your cluster from the list. In the Connect to popup, fill out the credentials from the output of the stack:

- Authentication: Use the default, which is Database user name and password.

- Database: dbtworkshop

- User name: dbtadmin

- Password: Use the autogenerated RSadminpassword from the output of the stack and save it for later.

- Click Create connection.

Load data

Now we are going to load our sample data into the S3 bucket that our CloudFormation template created. S3 buckets are a simple and inexpensive way to store data outside of Redshift.

-

The data used in this course is stored as CSVs in a public S3 bucket. You can use the following URLs to download these files. Download these to your computer to use in the following steps.

-

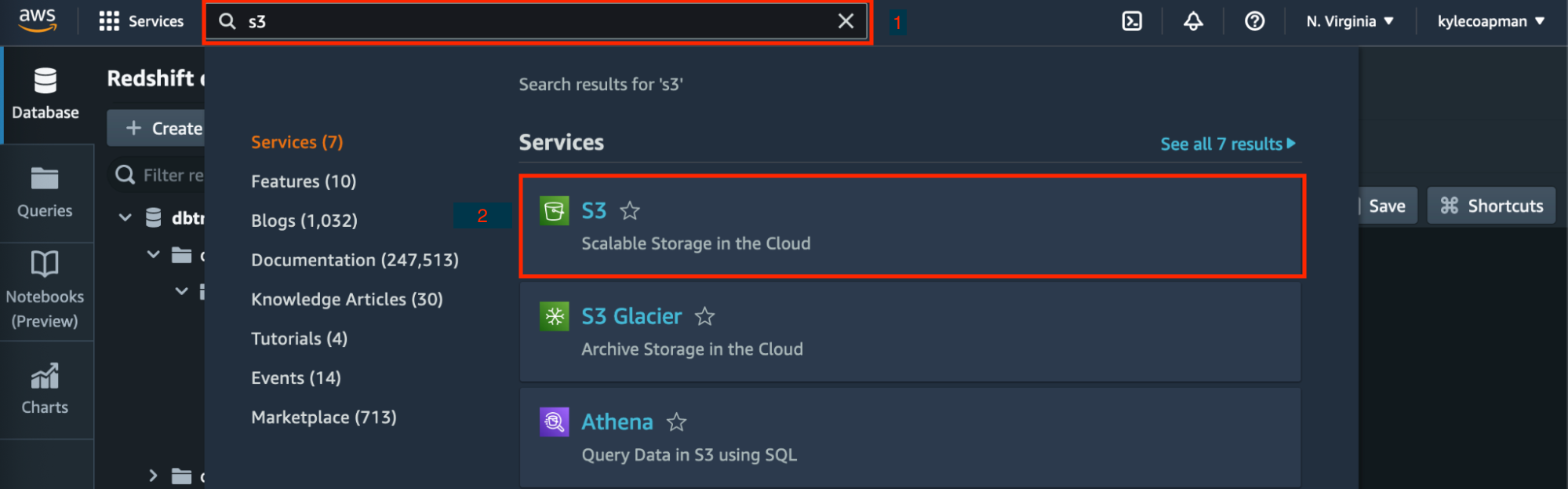

Now we are going to use the S3 bucket that you created with CloudFormation and upload the files. Go to the search bar at the top and type in

S3and click on S3. There will be sample data in the bucket already, feel free to ignore it or use it for other modeling exploration. The bucket will be prefixed withdbt-data-lake.

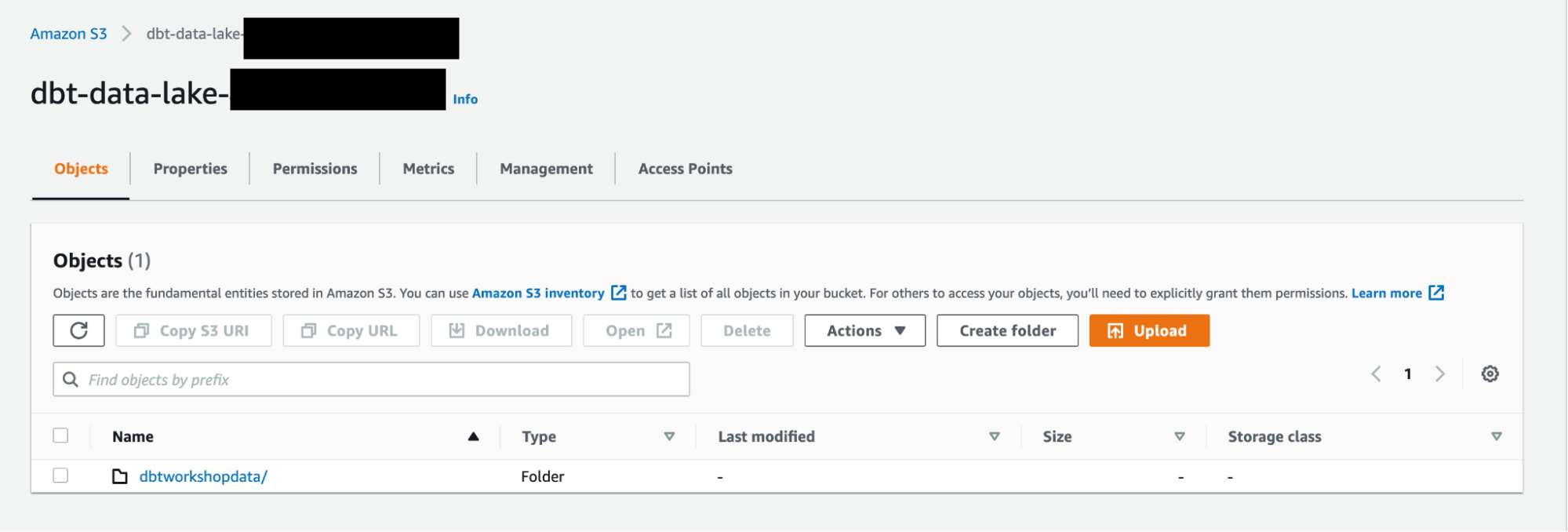

- Click on the name of the S3 bucket. If you have multiple S3 buckets, this will be the bucket that was listed under “Workshopbucket” on the Outputs page.

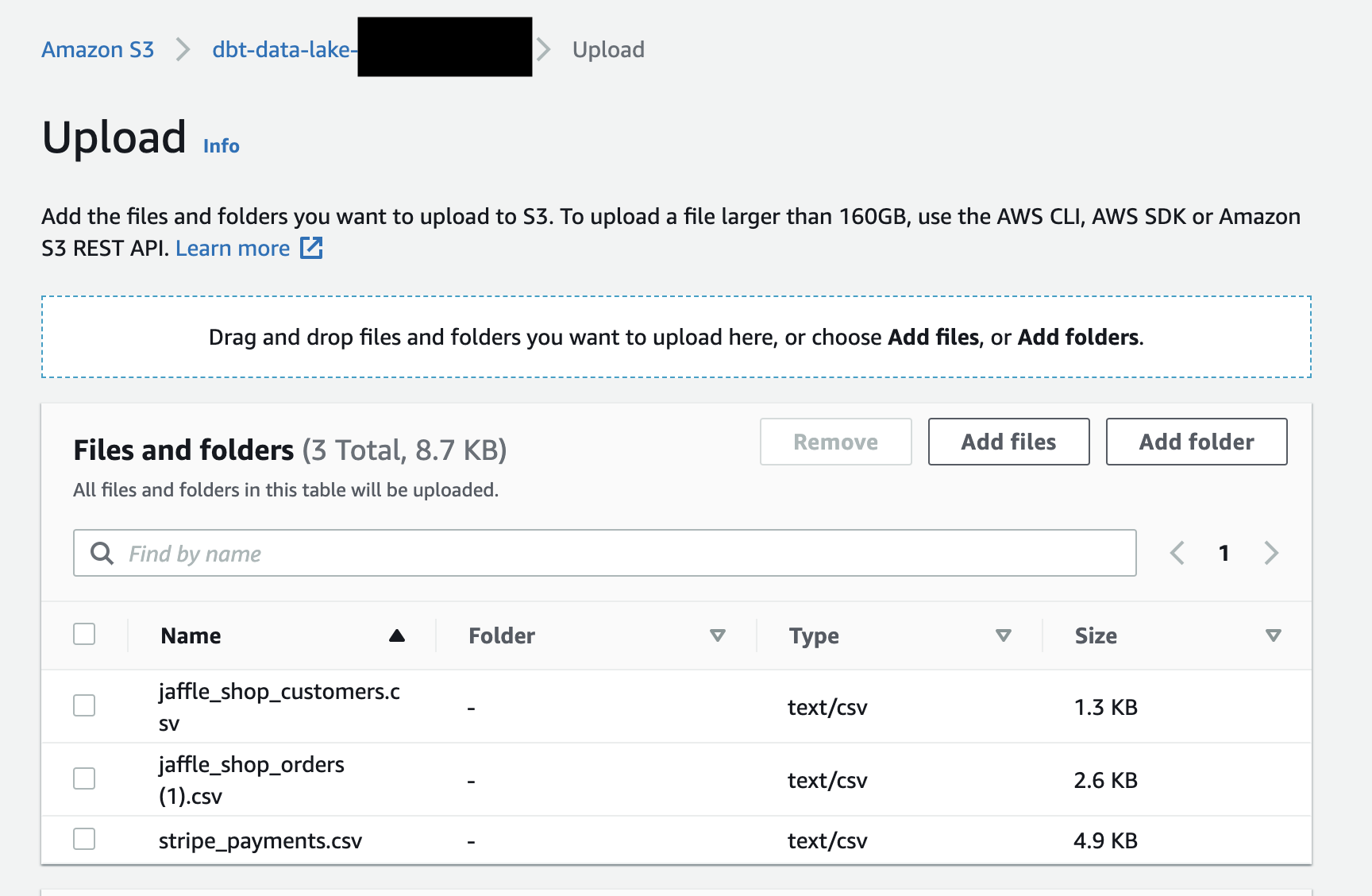

- Click Upload. Drag the three files into the UI and click the Upload button.

- Remember the name of the S3 bucket for later. It should look like this: s3://dbt-data-lake-xxxx. You will need it for the next section.

- Now let’s go back to the Redshift query editor. Search for Redshift in the search bar, choose your cluster, and select Query data.

- In your query editor, execute the query below to create the schemas that will hold your raw data. You can highlight each statement and then click Run to run them individually. If you are on the Classic Query Editor, you might need to input them separately into the UI. You should see these schemas listed under dbtworkshop.

create schema if not exists jaffle_shop;

create schema if not exists stripe;

- Now create the tables in your schemas using the statements below. They will appear as tables in the respective schemas.

create table jaffle_shop.customers(
    id integer,
    first_name varchar(50),
    last_name varchar(50)
);

create table jaffle_shop.orders(
    id integer,
    user_id integer,
    order_date date,
    status varchar(50)
);

create table stripe.payment(
    id integer,
    orderid integer,
    paymentmethod varchar(50),
    status varchar(50),
    amount integer,
    created date
);

- Now we need to copy the data from S3. This enables you to run queries in this guide for demonstrative purposes; it's not an example of how you would do this for a real project. Make sure to update the S3 location, IAM role, and region. You can find the S3 bucket and IAM role in your outputs from the CloudFormation stack. Find the stack by searching for CloudFormation in the search bar, then clicking Stacks in the CloudFormation tile.

copy jaffle_shop.customers(id, first_name, last_name)
from 's3://dbt-data-lake-xxxx/jaffle_shop_customers.csv'
iam_role 'arn:aws:iam::XXXXXXXXXX:role/RoleName'
region 'us-east-1'
delimiter ','
ignoreheader 1
acceptinvchars;

copy jaffle_shop.orders(id, user_id, order_date, status)
from 's3://dbt-data-lake-xxxx/jaffle_shop_orders.csv'
iam_role 'arn:aws:iam::XXXXXXXXXX:role/RoleName'
region 'us-east-1'
delimiter ','
ignoreheader 1
acceptinvchars;

copy stripe.payment(id, orderid, paymentmethod, status, amount, created)
from 's3://dbt-data-lake-xxxx/stripe_payments.csv'
iam_role 'arn:aws:iam::XXXXXXXXXX:role/RoleName'
region 'us-east-1'
delimiter ','
ignoreheader 1
acceptinvchars;

- Ensure that you can run a select * from each of the tables with the following code snippets.

select * from jaffle_shop.customers;

select * from jaffle_shop.orders;

select * from stripe.payment;
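
The three copy statements above differ only in table, column list, and file name, so it can help to render them from one place and edit the bucket, IAM role, and region once. The following is a minimal Python sketch, not part of dbt or AWS tooling: the names TABLES, render_copy, and render_all are hypothetical, and the bucket, role ARN, and region are the same placeholders used in this guide. It only prints SQL for you to paste into the query editor.

```python
# Hypothetical helper: renders the three COPY statements from this guide.
# The bucket, role ARN, and region passed in below are placeholders; replace
# them with the values from your CloudFormation stack outputs.

TABLES = {
    "jaffle_shop.customers": (
        ["id", "first_name", "last_name"],
        "jaffle_shop_customers.csv",
    ),
    "jaffle_shop.orders": (
        ["id", "user_id", "order_date", "status"],
        "jaffle_shop_orders.csv",
    ),
    "stripe.payment": (
        ["id", "orderid", "paymentmethod", "status", "amount", "created"],
        "stripe_payments.csv",
    ),
}


def render_copy(table, columns, file_name, bucket, iam_role, region):
    """Return one COPY statement in the same shape as the ones in this guide."""
    return (
        f"copy {table}({', '.join(columns)})\n"
        f"from '{bucket}/{file_name}'\n"
        f"iam_role '{iam_role}'\n"
        f"region '{region}'\n"
        "delimiter ','\n"
        "ignoreheader 1\n"
        "acceptinvchars;"
    )


def render_all(bucket, iam_role, region):
    """Return the COPY statements for all three tables, in TABLES order."""
    return [
        render_copy(table, columns, file_name, bucket, iam_role, region)
        for table, (columns, file_name) in TABLES.items()
    ]


if __name__ == "__main__":
    statements = render_all(
        bucket="s3://dbt-data-lake-xxxx",
        iam_role="arn:aws:iam::XXXXXXXXXX:role/RoleName",
        region="us-east-1",
    )
    print("\n\n".join(statements))
```

Keeping the per-table details in one dictionary means a typo in the bucket name or role ARN only needs fixing in one place.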

Connect dbt to Redshift

-

Create a new project in dbt. Navigate to Account settings (by clicking on your account name in the left side menu), and click + New Project.

-

Enter a project name and click Continue.

-

In the Configure your development environment section, click the Connection dropdown menu and select Add new connection. This directs you to the connection configuration settings.

-

In the Type section, select Redshift.

-

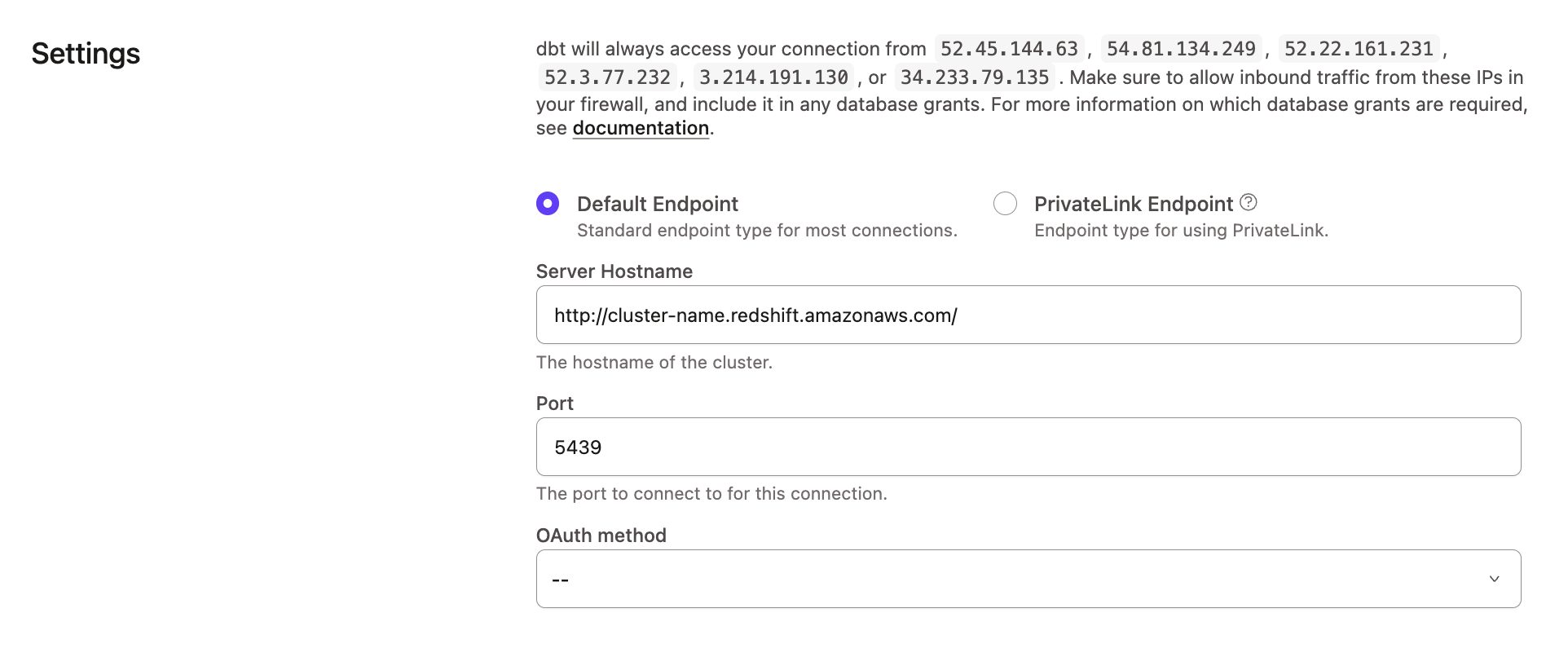

Enter your Redshift settings. Reference your credentials you saved from the CloudFormation template.

- Hostname: Your entire hostname.

- Port: 5439

- Database (under Optional settings): dbtworkshop

Avoid connection issues

To avoid connection issues with dbt, follow these minimal but essential AWS network setup steps, because Redshift network access isn't configured automatically:

- Allow inbound traffic on port 5439 from dbt's IP addresses in your Redshift security groups and Network Access Control Lists (NACLs) settings.

- Configure your Virtual Private Cloud with the necessary route tables, IP gateways (like an internet or NAT gateway), and inbound rules.

For more information, see AWS documentation on configuring Redshift security group communication.

-

Click Save.

-

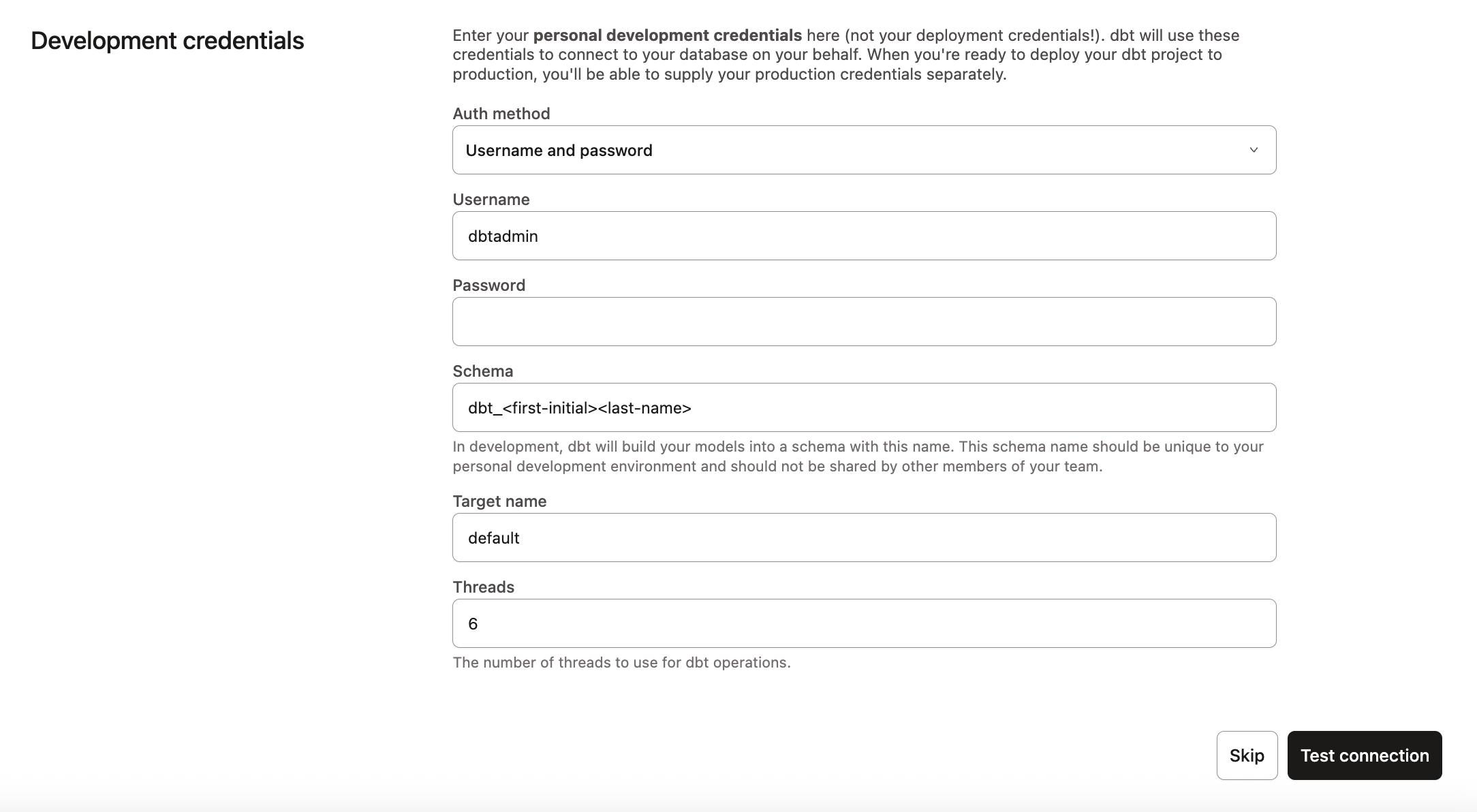

Set up your personal development credentials by going to Your profile > Credentials.

-

Select your project that uses the Redshift connection.

-

Click the configure your development environment and add a connection link. This directs you to a page where you can enter your personal development credentials.

- Set your development credentials. dbt uses these credentials to connect to Redshift. The credentials (as provided in your CloudFormation output) are:

- Username: dbtadmin

- Password: The autogenerated password that you used earlier in the guide.

- Schema: dbt automatically generates a schema name for you. By convention, this is dbt_<first-initial><last-name>. This is the schema connected directly to your development environment, and it's where your models will be built when running dbt within the Studio IDE.

- Click Test connection. This verifies that dbt can access your Redshift cluster.

- If the test succeeded, click Save to complete the configuration. If it failed, you might need to check your Redshift settings and credentials.

Set up a dbt managed repository

When you develop in dbt, you can leverage Git to version control your code.

To connect to a repository, you can either set up a dbt-hosted managed repository or directly connect to a supported git provider. Managed repositories are a great way to trial dbt without needing to create a new repository. In the long run, it's better to connect to a supported git provider to use features like automation and continuous integration.

To set up a managed repository:

- Under "Setup a repository", select Managed.

- Type a name for your repo, such as bbaggins-dbt-quickstart.

- Click Create. It will take a few seconds for your repository to be created and imported.

- Once you see the "Successfully imported repository" message, click Continue.

Initialize your dbt project and start developing

Now that you have a repository configured, you can initialize your project and start development in dbt:

- Click Start developing in the Studio IDE. It might take a few minutes for your project to spin up for the first time as it establishes your git connection, clones your repo, and tests the connection to the warehouse.

- Above the file tree to the left, click Initialize dbt project. This builds out your folder structure with example models.

- Make your initial commit by clicking Commit and sync. Use the commit message initial commit and click Commit. This creates the first commit to your managed repo and allows you to open a branch where you can add new dbt code.

- You can now directly query data from your warehouse and execute dbt run. You can try this out now:

- Click + Create new file, add this query to the new file, and click Save as to save the new file:

select * from jaffle_shop.customers

- In the command line bar at the bottom, enter dbt run and click Enter. You should see a dbt run succeeded message.

Build your first model

You have two options for working with files in the Studio IDE:

- Create a new branch (recommended): Create a new branch to edit and commit your changes. Navigate to Version Control on the left sidebar and click Create branch.

- Edit in the protected primary branch: Choose this if you prefer to edit, format, or lint files and execute dbt commands directly in your primary git branch. The Studio IDE prevents commits to the protected branch, so you will be prompted to commit your changes to a new branch.

Name the new branch add-customers-model.

- Click the ... next to the models directory, then select Create file.

- Name the file customers.sql, then click Create.

- Copy the following query into the file and click Save.

with customers as (

    select
        id as customer_id,
        first_name,
        last_name

    from jaffle_shop.customers

),

orders as (

    select
        id as order_id,
        user_id as customer_id,
        order_date,
        status

    from jaffle_shop.orders

),

customer_orders as (

    select
        customer_id,
        min(order_date) as first_order_date,
        max(order_date) as most_recent_order_date,
        count(order_id) as number_of_orders

    from orders

    group by 1

),

final as (

    select
        customers.customer_id,
        customers.first_name,
        customers.last_name,
        customer_orders.first_order_date,
        customer_orders.most_recent_order_date,
        coalesce(customer_orders.number_of_orders, 0) as number_of_orders

    from customers

    left join customer_orders using (customer_id)

)

select * from final

- Enter dbt run in the command prompt at the bottom of the screen. You should get a successful run and see the three models.

Later, you can connect your business intelligence (BI) tools to these views and tables so that they read only cleaned-up data rather than raw data.
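
To see what the customers query returns for a customer who has never ordered, you can run the same logic on a few invented rows. The sketch below uses Python's built-in sqlite3 module as a stand-in for Redshift, which is an assumption made purely so the example runs locally. The tables raw_customers and raw_orders and their rows are hypothetical; the CTE logic mirrors the model in this guide.

```python
import sqlite3

# In-memory SQLite stands in for Redshift here; the sample rows are made up.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table raw_customers (id integer, first_name text, last_name text);
    create table raw_orders (id integer, user_id integer, order_date text, status text);

    insert into raw_customers values (1, 'Ada', 'L.'), (2, 'Grace', 'H.');
    insert into raw_orders values
        (10, 1, '2018-01-01', 'completed'),
        (11, 1, '2018-02-15', 'shipped');
    """
)

# Same shape as models/customers.sql, pointed at the stand-in tables.
CUSTOMERS_MODEL = """
with customers as (
    select id as customer_id, first_name, last_name
    from raw_customers
),
orders as (
    select id as order_id, user_id as customer_id, order_date, status
    from raw_orders
),
customer_orders as (
    select
        customer_id,
        min(order_date) as first_order_date,
        max(order_date) as most_recent_order_date,
        count(order_id) as number_of_orders
    from orders
    group by 1
),
final as (
    select
        customers.customer_id,
        customers.first_name,
        customers.last_name,
        customer_orders.first_order_date,
        customer_orders.most_recent_order_date,
        coalesce(customer_orders.number_of_orders, 0) as number_of_orders
    from customers
    left join customer_orders using (customer_id)
)
select * from final order by customer_id
"""

rows = conn.execute(CUSTOMERS_MODEL).fetchall()
for row in rows:
    print(row)
```

The left join keeps customer 2 even though they have no orders, and coalesce turns the missing order count into 0 while the two date columns stay NULL.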

Change the way your model is materialized

One of the most powerful features of dbt is that you can change the way a model is materialized in your warehouse simply by changing a configuration value. You can switch between tables and views by changing a keyword rather than writing the data definition language (DDL) yourself; dbt handles that behind the scenes.

By default, everything gets created as a view. You can override that at the directory level so everything in that directory uses a different materialization.

- Edit your dbt_project.yml file.

- Update your project name to:

dbt_project.yml

name: 'jaffle_shop'

- Configure jaffle_shop so everything in it will be materialized as a table, and configure example so everything in it will be materialized as a view. Update your models config in the project YAML file to:

dbt_project.yml

models:
  jaffle_shop:
    +materialized: table
    example:
      +materialized: view

- Click Save.

- Enter the dbt run command. Your customers model should now be built as a table!

Info: To do this, dbt had to first run a drop view statement (or API call on BigQuery), then a create table as statement.

- Edit models/customers.sql to override the dbt_project.yml for the customers model only by adding the following snippet to the top, and click Save:

models/customers.sql

{{
  config(
    materialized='view'
  )
}}

with customers as (

    select
        id as customer_id
        ...

)

- Enter the dbt run command. Your model, customers, should now build as a view.

- BigQuery users need to run dbt run --full-refresh instead of dbt run to fully apply materialization changes.

- Enter the dbt run --full-refresh command for this to take effect in your warehouse.

Delete the example models

You can now delete the files that dbt created when you initialized the project:

- Delete the models/example/ directory.

- Delete the example: key from your dbt_project.yml file, and any configurations that are listed under it.

dbt_project.yml

# before
models:
  jaffle_shop:
    +materialized: table
    example:
      +materialized: view

dbt_project.yml

# after
models:
  jaffle_shop:
    +materialized: table

- Save your changes.

Build models on top of other models

As a best practice in SQL, you should separate logic that cleans up your data from logic that transforms your data. You have already started doing this in the existing query by using common table expressions (CTEs).

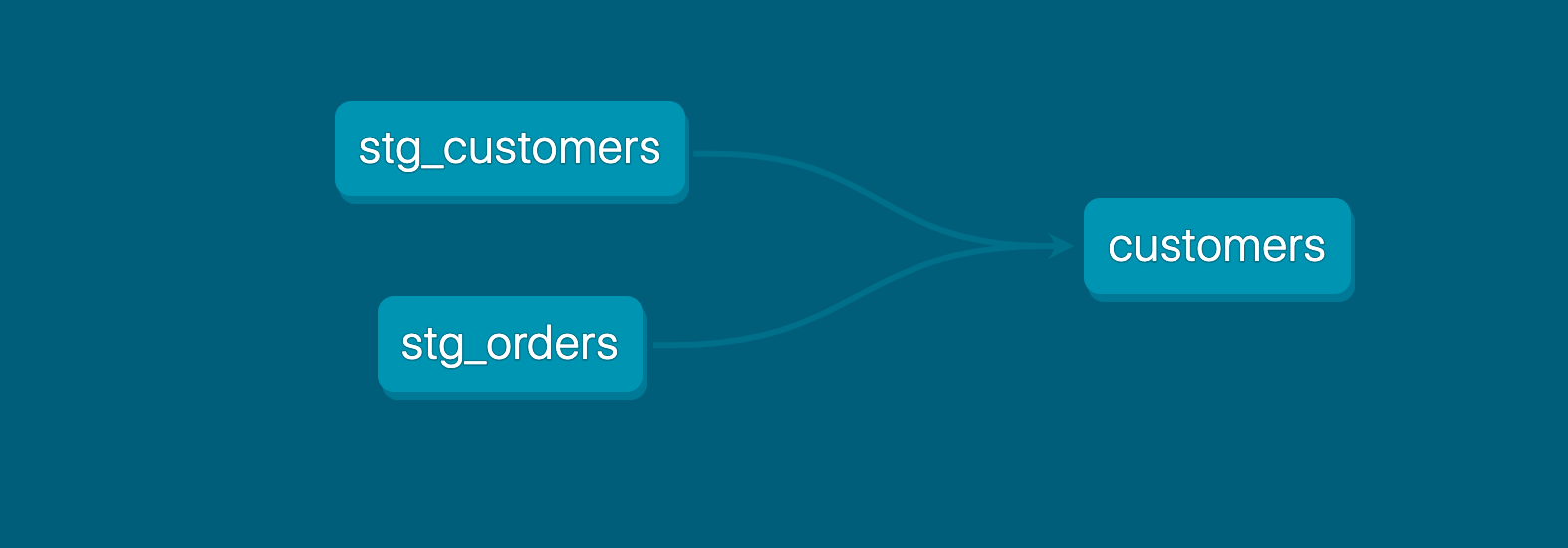

Now you can experiment by separating the logic out into separate models and using the ref function to build models on top of other models:

- Create a new SQL file, models/stg_customers.sql, with the SQL from the customers CTE in our original query.

- Create a second new SQL file, models/stg_orders.sql, with the SQL from the orders CTE in our original query.

models/stg_customers.sql

select
    id as customer_id,
    first_name,
    last_name

from jaffle_shop.customers

models/stg_orders.sql

select
    id as order_id,
    user_id as customer_id,
    order_date,
    status

from jaffle_shop.orders

- Edit the SQL in your models/customers.sql file as follows:

models/customers.sql

with customers as (

    select * from {{ ref('stg_customers') }}

),

orders as (

    select * from {{ ref('stg_orders') }}

),

customer_orders as (

    select
        customer_id,
        min(order_date) as first_order_date,
        max(order_date) as most_recent_order_date,
        count(order_id) as number_of_orders

    from orders

    group by 1

),

final as (

    select
        customers.customer_id,
        customers.first_name,
        customers.last_name,
        customer_orders.first_order_date,
        customer_orders.most_recent_order_date,
        coalesce(customer_orders.number_of_orders, 0) as number_of_orders

    from customers

    left join customer_orders using (customer_id)

)

select * from final

- Execute dbt run.

This time, when you performed a dbt run, separate views/tables were created for stg_customers, stg_orders, and customers. dbt inferred the order to run these models. Because customers depends on stg_customers and stg_orders, dbt builds customers last. You do not need to explicitly define these dependencies.
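
The idea of inferring a run order from ref calls can be sketched in a few lines of Python. This illustrates the concept only and is not how dbt itself is implemented: the MODELS dictionary holds abbreviated, hypothetical stand-ins for the three model files, and the REF_PATTERN regex and build_order function are names invented for this example. It relies on graphlib from the standard library (Python 3.9 or later).

```python
import re
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Toy stand-ins for the three model files in this guide (SQL abbreviated).
MODELS = {
    "customers": (
        "select * from {{ ref('stg_customers') }} "
        "left join {{ ref('stg_orders') }} using (customer_id)"
    ),
    "stg_customers": "select id as customer_id from jaffle_shop.customers",
    "stg_orders": "select id as order_id from jaffle_shop.orders",
}

# Matches {{ ref('model_name') }} and captures the model name.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def build_order(models):
    """Return model names so that every model comes after the models it refs."""
    graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


order = build_order(MODELS)
print(order)
```

Because customers refs both staging models, any valid order places it last; the two staging models have no dependency on each other, so their relative order is not significant.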

Add tests to your models

Adding data tests to a project helps validate that your models are working correctly.

To add data tests to your project:

- Create a new YAML file in the models directory, named models/schema.yml.

- Add the following contents to the file:

models/schema.yml

version: 2

models:
  - name: customers
    columns:
      - name: customer_id
        data_tests:
          - unique
          - not_null

  - name: stg_customers
    columns:
      - name: customer_id
        data_tests:
          - unique
          - not_null

  - name: stg_orders
    columns:
      - name: order_id
        data_tests:
          - unique
          - not_null
      - name: status
        data_tests:
          - accepted_values:
              arguments: # available in v1.10.5 and higher. Older versions can set the <argument_name> as the top-level property.
                values: ['placed', 'shipped', 'completed', 'return_pending', 'returned']
      - name: customer_id
        data_tests:
          - not_null
          - relationships:
              arguments:
                to: ref('stg_customers')
                field: customer_id

- Run dbt test, and confirm that all your tests passed.

When you run dbt test, dbt iterates through your YAML files, and constructs a query for each test. Each query will return the number of records that fail the test. If this number is 0, then the test is successful.
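
The "one query per test, zero failing rows means pass" idea can be tried locally. The sketch below uses Python's built-in sqlite3 module as a stand-in warehouse, with invented rows that break some rules on purpose. The queries approximate what each test type checks; they are an assumption for illustration, not the exact SQL that dbt compiles, and CHECKS and run_checks are hypothetical names.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; rows are invented so that
# some checks fail on purpose.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table stg_customers (customer_id integer);
    create table stg_orders (order_id integer, customer_id integer, status text);

    insert into stg_customers values (1), (2);
    insert into stg_orders values
        (10, 1, 'placed'),
        (11, 2, 'shipped'),
        (11, 2, 'lost'),      -- duplicate order_id and unexpected status
        (12, 3, 'returned');  -- customer 3 does not exist
    """
)

# One query per test type; each returns the rows that violate the rule.
CHECKS = {
    "unique(order_id)": """
        select order_id from stg_orders
        where order_id is not null
        group by order_id having count(*) > 1
    """,
    "not_null(order_id)": """
        select order_id from stg_orders where order_id is null
    """,
    "accepted_values(status)": """
        select status from stg_orders
        where status not in
            ('placed', 'shipped', 'completed', 'return_pending', 'returned')
    """,
    "relationships(customer_id)": """
        select o.customer_id from stg_orders as o
        left join stg_customers as c on o.customer_id = c.customer_id
        where o.customer_id is not null and c.customer_id is null
    """,
}


def run_checks(connection):
    """Return {check name: number of failing rows}; 0 means the check passes."""
    results = {}
    for name, query in CHECKS.items():
        row = connection.execute(f"select count(*) from ({query})").fetchone()
        results[name] = row[0]
    return results


results = run_checks(conn)
for name, failures in results.items():
    status = "PASS" if failures == 0 else "FAIL"
    print(f"{name}: {status} ({failures} failing rows)")
```

With these sample rows, only the not_null check passes; the other three each report one failing row.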

Document your models

Adding documentation to your project allows you to describe your models in rich detail, and share that information with your team. Here, we're going to add some basic documentation to our project.

Update your models/schema.yml file to include some descriptions, such as those below.

version: 2

models:
  - name: customers
    description: One record per customer
    columns:
      - name: customer_id
        description: Primary key
        data_tests:
          - unique
          - not_null
      - name: first_order_date
        description: NULL when a customer has not yet placed an order.

  - name: stg_customers
    description: This model cleans up customer data
    columns:
      - name: customer_id
        description: Primary key
        data_tests:
          - unique
          - not_null

  - name: stg_orders
    description: This model cleans up order data
    columns:
      - name: order_id
        description: Primary key
        data_tests:
          - unique
          - not_null
      - name: status
        data_tests:
          - accepted_values:
              arguments: # available in v1.10.5 and higher. Older versions can set the <argument_name> as the top-level property.
                values: ['placed', 'shipped', 'completed', 'return_pending', 'returned']
      - name: customer_id
        data_tests:
          - not_null
          - relationships:
              arguments:
                to: ref('stg_customers')
                field: customer_id

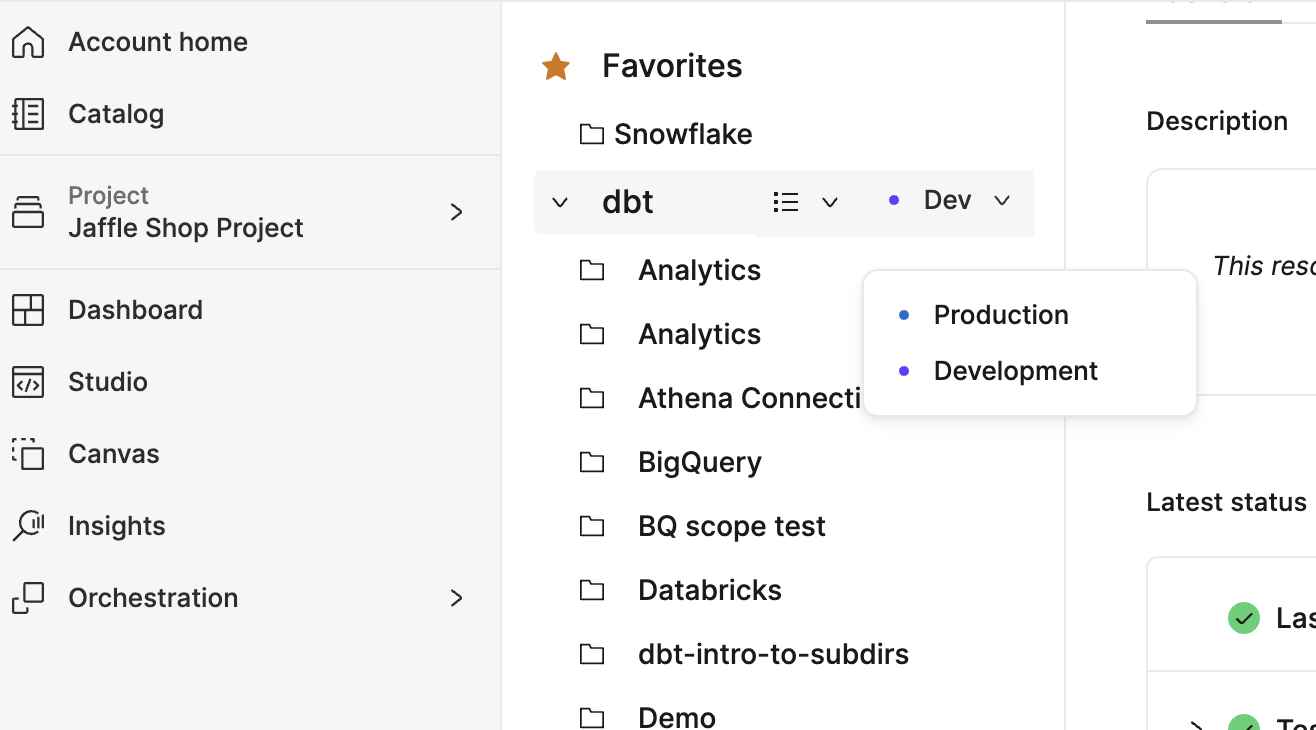

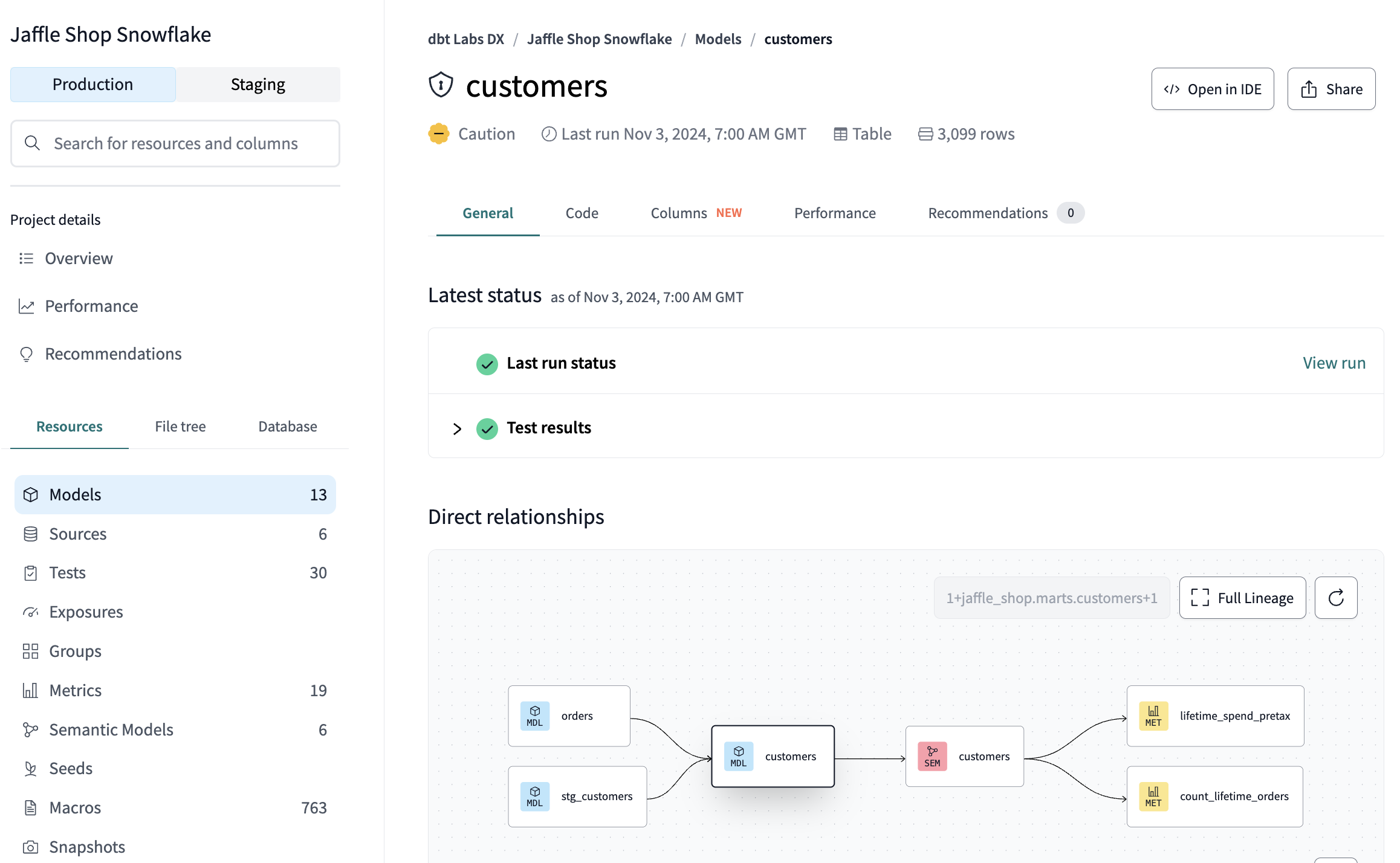

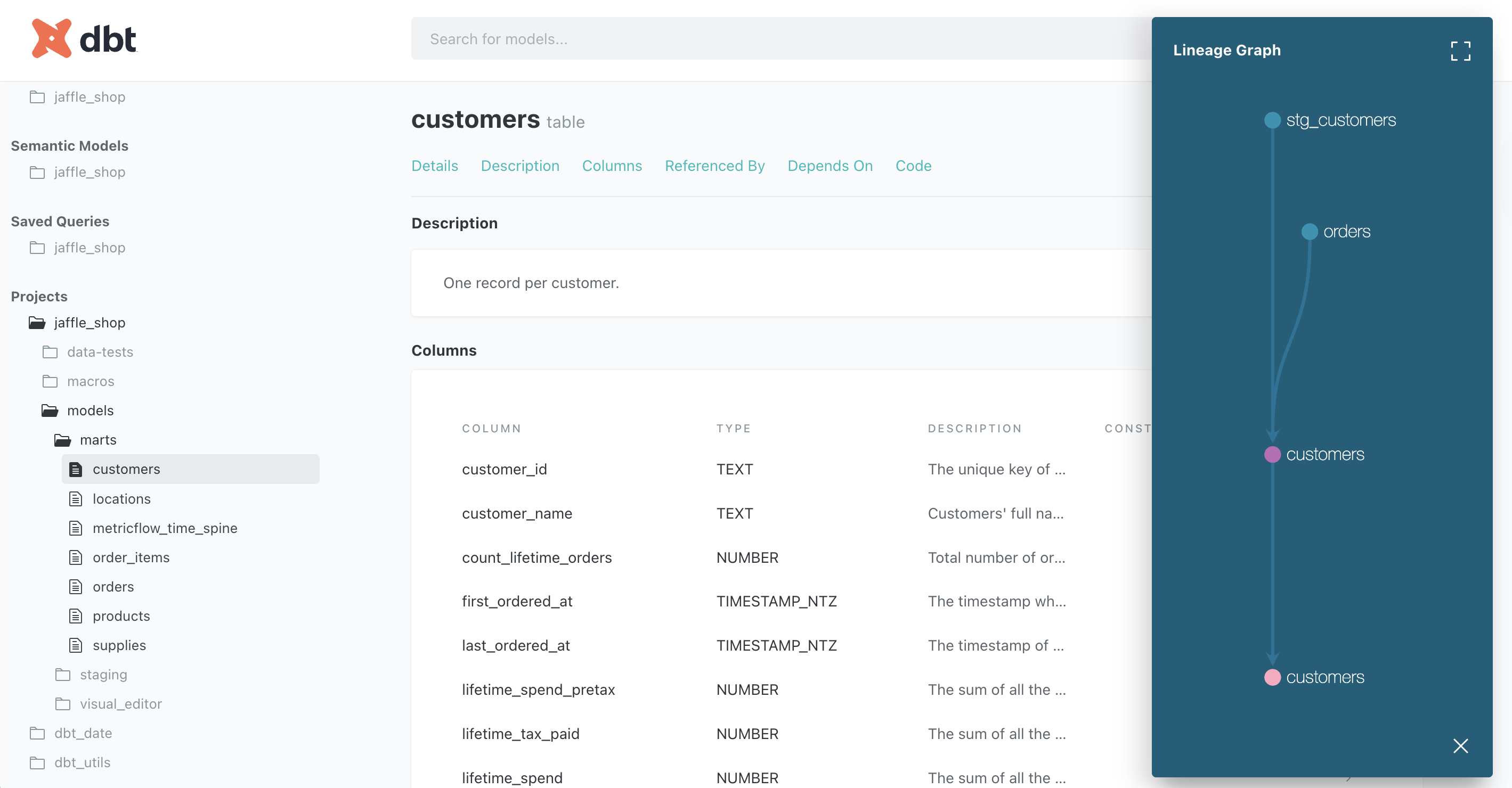

Catalog provides powerful tools to interact with your dbt projects, including documentation:

- From the IDE, run one of the following commands:

- dbt docs generate if you're on dbt Core

- dbt build if you're on the dbt Fusion Engine

- Click Catalog in the navigation menu to launch Catalog.

- In the Catalog pane, click the environment selection dropdown menu at the top of the file tree and change it from Production to Development.

- Select your project from the file tree.

- Use the search bar or browse the resource list to find the customers model.

- Click the model to view its details, including the descriptions you added.

Catalog displays your model's description, column documentation, data tests, and lineage graph. You can also see which columns are missing documentation and track test coverage across your project.

You can view docs directly from the IDE if you're on Latest or another version of dbt Core. Keep in mind that this is a legacy view and doesn't offer the same level of interactivity as Catalog.

- In the IDE, run dbt docs generate.

- From the navigation bar, click the View docs icon located to the right of the branch name.

- From Projects, select your project name and expand the folders.

- Click models > customers. (In this guide, customers.sql sits directly in the models directory, so there is no marts folder.)

Commit your changes

Now that you've built your customers model, you need to commit the changes you made to the project so that the repository has your latest code.

If you edited directly in the protected primary branch:

- Click the Commit and sync git button. This action prepares your changes for commit.

- A modal titled Commit to a new branch will appear.

- In the modal window, name your new branch add-customers-model. This branches off from your primary branch with your new changes.

- Add a commit message, such as "Add customers model, tests, docs" and commit your changes.

- Click Merge this branch to main to add these changes to the main branch on your repo.

If you created a new branch before editing:

- Since you already branched out of the primary protected branch, go to Version Control on the left.

- Click Commit and sync to add a message.

- Add a commit message, such as "Add customers model, tests, docs."

- Click Merge this branch to main to add these changes to the main branch on your repo.

Deploy dbt

Use dbt's Scheduler to deploy your production jobs confidently and build observability into your processes. You'll learn to create a deployment environment and run a job in the following steps.

Create a deployment environment

- From the main menu, go to Orchestration > Environments.

- Click Create environment.

- In the Name field, write the name of your deployment environment. For example, "Production."

- The dbt version will default to the latest available. We recommend all new projects run on the latest version of dbt.

- Under Deployment connection, enter the name of the dataset you want to use as the target, such as "Analytics". This will allow dbt to build and work with that dataset. For some data warehouses, the target dataset may be referred to as a "schema".

- Click Save.

Create and run a job

Jobs are a set of dbt commands that you want to run on a schedule. For example, dbt build.

As the jaffle_shop business gains more customers, and those customers create more orders, you will see more records added to your source data. Because you materialized the customers model as a table, you'll need to periodically rebuild your table to ensure that the data stays up-to-date. This update will happen when you run a job.
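
The difference between a view and a table when new source rows arrive can be shown with a small local experiment. The sketch below uses Python's built-in sqlite3 module as a stand-in for the warehouse; the table and view names and the rows are hypothetical, and the drop-and-recreate step is a simplification of a rebuild, not a description of the exact statements dbt issues on Redshift.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse; names and rows are invented.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table raw_customers (id integer, first_name text);
    insert into raw_customers values (1, 'Ada');

    -- Same select statement, two materializations.
    create view customers_view as
        select id as customer_id, first_name from raw_customers;
    create table customers_table as
        select id as customer_id, first_name from raw_customers;

    -- New source data arrives after the models were built.
    insert into raw_customers values (2, 'Grace');
    """
)


def row_count(relation):
    """Count rows in a table or view (relation names here are trusted constants)."""
    return conn.execute(f"select count(*) from {relation}").fetchone()[0]


view_rows = row_count("customers_view")            # the view reads live data
stale_table_rows = row_count("customers_table")    # the table is a snapshot

# A job run effectively rebuilds the table from the current source data.
conn.executescript(
    """
    drop table customers_table;
    create table customers_table as
        select id as customer_id, first_name from raw_customers;
    """
)
rebuilt_table_rows = row_count("customers_table")

print(view_rows, stale_table_rows, rebuilt_table_rows)
```

The view sees both customers immediately, while the table keeps its single original row until it is rebuilt, which is the work a scheduled job does for you.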

- After creating your deployment environment, you should be directed to the page for a new environment. If not, select Orchestration from the main menu, then click Jobs.

- Click Create job > Deploy job.

- Provide a job name (for example, "Production run") and select the environment you just created.

- Scroll down to the Execution settings section.

- Under Commands, add this command as part of your job if you don't see it:

dbt build

- Select the Generate docs on run option to automatically generate updated project docs each time your job runs.

- For this exercise, do not set a schedule for your project to run — while your organization's project should run regularly, there's no need to run this example project on a schedule. Scheduling a job is sometimes referred to as deploying a project.

- Click Save, then click Run now to run your job.

- Click the run and watch its progress under Run summary.

- Once the run is complete, click View Documentation to see the docs for your project.

Congratulations 🎉! You've just deployed your first dbt project!
