Python models

Note that only specific data platforms support dbt-py models. Check the platform configuration pages to confirm if Python models are supported.

Python models for Snowflake, BigQuery, and Databricks are supported in Fusion. Please refer to the supported features page to learn more about Fusion.

We encourage you to:

- Read the original discussion that proposed this feature.

- Share your thoughts and ideas on next steps for Python models.

- Join the #dbt-core-python-models channel in the dbt Community Slack.

Overview

dbt Python (dbt-py) models can help you solve use cases that can't be solved with SQL. You can perform analyses using tools available in the open-source Python ecosystem, including state-of-the-art packages for data science and statistics. Before, you would have needed separate infrastructure and orchestration to run Python transformations in production. Python transformations defined in dbt are models in your project with all the same capabilities around testing, documentation, and lineage.

import ...

def model(dbt, session):
    my_sql_model_df = dbt.ref("my_sql_model")
    final_df = ...  # stuff you can't write in SQL!
    return final_df

models:
  - name: my_python_model
    # Document within the same codebase
    description: My transformation written in Python
    # Configure in ways that feel intuitive and familiar
    config:
      materialized: table
      tags: ['python']
    # Test the results of my Python transformation
    columns:
      - name: id
        # Standard validation for 'grain' of Python results
        data_tests:
          - unique
          - not_null
    data_tests:
      # Write your own validation logic (in SQL) for Python results
      - custom_generic_test

To use dbt Python models with dbt Core or the Fusion engine, you need an adapter for a data platform that supports a fully featured Python runtime. In a dbt Python model, all Python code is executed remotely on the platform. None of it is run by dbt locally. We believe in clearly separating model definition from model execution. In this and many other ways, you'll find that dbt's approach to Python models mirrors its longstanding approach to modeling data in SQL.

We've written this guide assuming that you have some familiarity with dbt. If you've never before written a dbt model, we encourage you to start by first reading dbt Models. Throughout, we'll be drawing connections between Python models and SQL models, as well as making clear their differences.

What is a Python model?

A dbt Python model is a function that reads in dbt sources or other models, applies a series of transformations, and returns a transformed dataset. DataFrame operations define the starting points, the end state, and each step along the way.

This is similar to the role of CTEs in dbt SQL models. We use CTEs to pull in upstream datasets, define (and name) a series of meaningful transformations, and end with a final select statement. You can run the compiled version of a dbt SQL model to see the data included in the resulting view or table. When you dbt run, dbt wraps that query in create view, create table, or more complex DDL to save its results in the database.

Instead of a final select statement, each Python model returns a final DataFrame. Each DataFrame operation is "lazily evaluated." In development, you can preview its data, using methods like .show() or .head(). When you run a Python model, the full result of the final DataFrame will be saved as a table in your data warehouse.
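To make "lazily evaluated" concrete, here is a minimal sketch in plain Python. The LazyFrame class and its methods are hypothetical stand-ins written for this illustration, not part of Snowpark, PySpark, or BigQuery DataFrames. Each operation only records a step, and nothing runs until collect() is called.

```python
class LazyFrame:
    """Toy stand-in for a lazily evaluated DataFrame (hypothetical, not a real API)."""

    def __init__(self, rows, steps=()):
        self._rows = rows
        self._steps = tuple(steps)

    def filter(self, predicate):
        # Record the step; nothing is computed yet.
        return LazyFrame(self._rows, self._steps + (("filter", predicate),))

    def with_column(self, name, fn):
        # Record the step; nothing is computed yet.
        return LazyFrame(self._rows, self._steps + (("with_column", (name, fn)),))

    def collect(self):
        # Only now are the recorded steps applied, in order.
        rows = [dict(r) for r in self._rows]
        for kind, arg in self._steps:
            if kind == "filter":
                rows = [r for r in rows if arg(r)]
            else:
                name, fn = arg
                rows = [{**r, name: fn(r)} for r in rows]
        return rows


calls = []  # tracks when row-level code actually runs


def is_warm(row):
    calls.append(row["degree"])
    return row["degree"] > 10


temps = LazyFrame([{"degree": 5}, {"degree": 20}])
plan = temps.filter(is_warm).with_column("degree_plus_one", lambda r: r["degree"] + 1)
assert calls == []       # building the plan ran no row-level code
result = plan.collect()  # execution happens here
print(result)            # [{'degree': 20, 'degree_plus_one': 21}]
```

Real DataFrame libraries push the recorded plan down to the data platform rather than looping in Python, but the idea of deferring the work until a result is requested is the same.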

dbt Python models have access to almost all of the same configuration options as SQL models. You can test and document them, add tags and meta properties, and grant access to their results to other users. You can select them by their name, file path, configurations, whether they are upstream or downstream of another model, or if they have been modified compared to a previous project state.

Defining a Python model

Each Python model lives in a .py file in your models/ folder. It defines a function named model(), which takes two parameters:

- dbt: A class compiled by dbt Core, unique to each model, that enables you to run your Python code in the context of your dbt project and DAG.

- session: A class representing your data platform’s connection to the Python backend. The session is needed to read in tables as DataFrames, and to write DataFrames back to tables. In PySpark, by convention, the SparkSession is named spark, and available globally. For consistency across platforms, we always pass it into the model function as an explicit argument called session.

The model() function must return a single DataFrame. On Snowpark (Snowflake), this can be a Snowpark or pandas DataFrame. On BigQuery, this can be a BigQuery DataFrames (BigFrames), pandas, or Spark DataFrame. Via PySpark (Databricks), this can be a Spark, pandas, or pandas-on-Spark DataFrame. For more information about choosing between pandas and native DataFrames, see DataFrame API and syntax.

When you dbt run --select python_model, dbt will prepare and pass in both arguments (dbt and session). All you have to do is define the function. This is how every single Python model should look:

def model(dbt, session):
    ...
    return final_df

Referencing other models

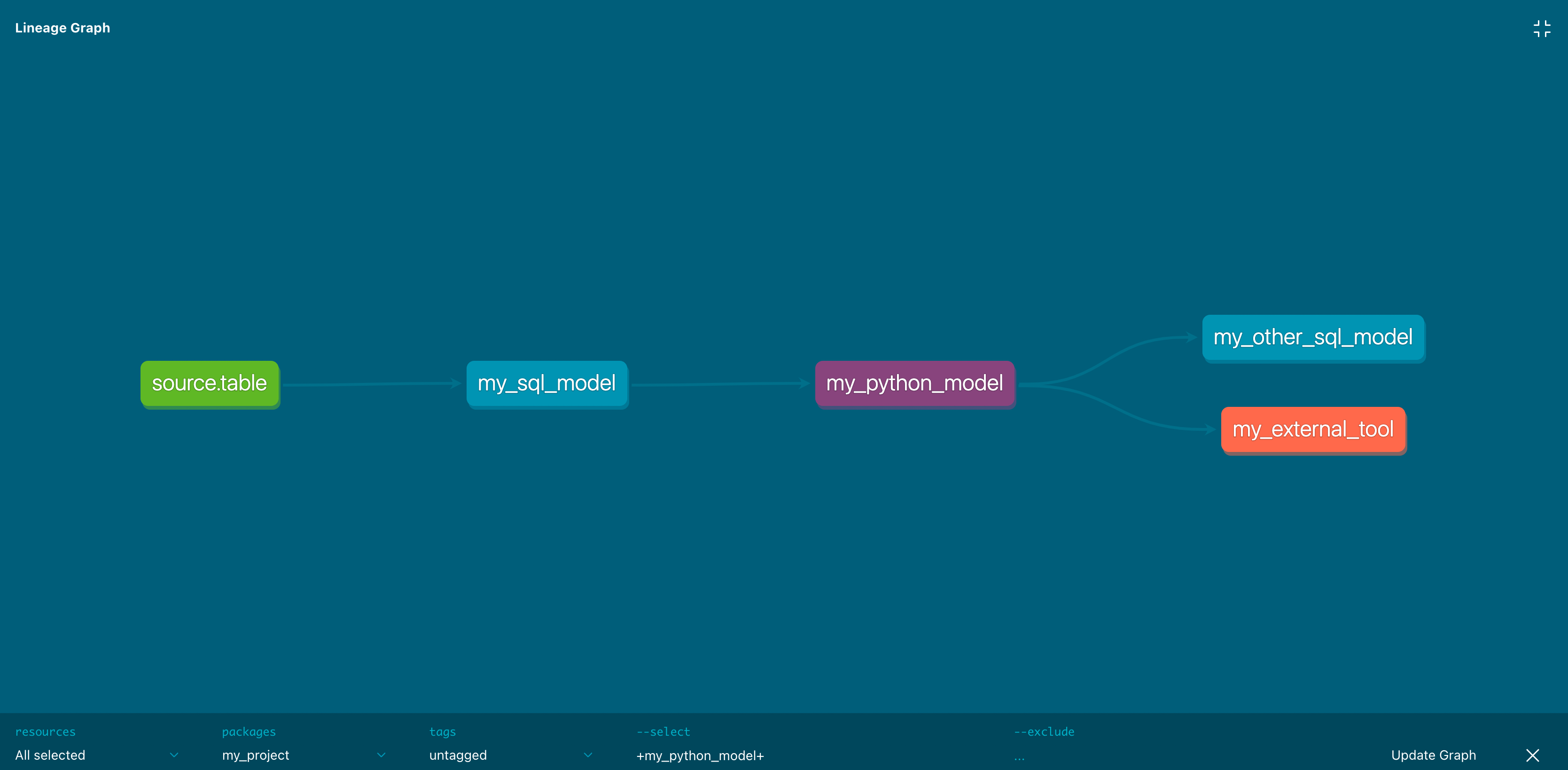

Python models participate fully in dbt's directed acyclic graph (DAG) of transformations. Use the dbt.ref() method within a Python model to read data from other models (SQL or Python). If you want to read directly from a raw source table, use dbt.source(). These methods return DataFrames pointing to the upstream source, model, seed, or snapshot.

def model(dbt, session):
    # DataFrame representing an upstream model
    upstream_model = dbt.ref("upstream_model_name")

    # DataFrame representing an upstream source
    upstream_source = dbt.source("upstream_source_name", "table_name")

    ...

Of course, you can ref() your Python model in downstream SQL models, too:

with upstream_python_model as (
    select * from {{ ref('my_python_model') }}
),

...

Referencing ephemeral models is currently not supported (see the related feature request).

From dbt version 1.8, Python models also support dynamic configurations within Python f-strings. This allows for more nuanced and dynamic model configurations directly within your Python code. For example:

# Previously, attempting to access a configuration value like this would result in None

print(f"{dbt.config.get('my_var')}") # Output before change: None

# Now you can access the actual configuration value

# Assuming 'my_var' is configured to 5 for the current model

print(f"{dbt.config.get('my_var')}") # Output after change: 5

This also means you can use dbt.config.get() within Python models to ensure that configuration values are effectively retrievable and usable within Python f-strings.

Configuring Python models

Just like SQL models, there are three ways to configure Python models:

- In dbt_project.yml, where you can configure many models at once

- In a dedicated .yml file, within the models/ directory

- Within the model's .py file, using the dbt.config() method

Calling the dbt.config() method will set configurations for your model within your .py file, similar to the {{ config() }} macro in .sql model files:

def model(dbt, session):
    # setting configuration
    dbt.config(materialized="table")

There's a limit to how complex you can get with the dbt.config() method. It accepts only literal values (strings, booleans, and numeric types) and dynamic configuration. Passing another function or a more complex data structure is not possible. The reason is that dbt statically analyzes the arguments to config() while parsing your model without executing your Python code. If you need to set a more complex configuration, we recommend you define it using the config property in a properties YAML file.
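The following sketch illustrates why only literal values can be read without executing the model. It uses Python's standard ast module to pull keyword arguments out of a dbt.config() call in source text. This is an illustration of static analysis in general, not dbt's actual parser; the helper name extract_config_literals and the sample sources are assumptions made for this example.

```python
import ast


def extract_config_literals(source):
    """Collect keyword arguments of dbt.config(...) calls without running the code."""
    found = {}
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        is_dbt_config = (
            isinstance(func, ast.Attribute)
            and func.attr == "config"
            and isinstance(func.value, ast.Name)
            and func.value.id == "dbt"
        )
        if not is_dbt_config:
            continue
        for kw in node.keywords:
            if kw.arg is None:
                raise ValueError("**kwargs cannot be analyzed statically")
            try:
                # literal_eval accepts only literals (and containers of literals).
                found[kw.arg] = ast.literal_eval(kw.value)
            except ValueError:
                raise ValueError(f"config '{kw.arg}' is not a literal value") from None
    return found


good = '''
def model(dbt, session):
    dbt.config(materialized="table", packages=["numpy"])
    return dbt.ref("upstream")
'''

bad = '''
def model(dbt, session):
    dbt.config(materialized=pick_materialization())
    return dbt.ref("upstream")
'''

print(extract_config_literals(good))  # {'materialized': 'table', 'packages': ['numpy']}
```

Calling extract_config_literals(bad) raises ValueError, because the value of pick_materialization() can only be known by running the code, which a parser does not do.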

Accessing project context

dbt Python models don't use Jinja to render compiled code. Python models have limited access to global project contexts compared to SQL models. That context is made available from the dbt class, passed in as an argument to the model() function.

Out of the box, the dbt class supports:

- Returning DataFrames referencing the locations of other resources: dbt.ref() + dbt.source()

- Accessing the database location of the current model: dbt.this() (also: dbt.this.database, .schema, .identifier)

- Determining if the current model's run is incremental: dbt.is_incremental

- Accessing custom values stored in meta: dbt.config.meta_get()

You can extend this context by setting additional values in the model's config and then "getting" them with dbt.config.get(). The dbt.config.get() method supports dynamic access to configurations within Python models, which makes model logic more flexible. This includes inputs such as var, env_var, and target. If you want to use those values for conditional logic in your model, you must set them through the config in a dedicated properties YAML file:

models:
  - name: my_python_model
    config:
      materialized: table
      target_name: "{{ target.name }}"
      specific_var: "{{ var('SPECIFIC_VAR') }}"
      specific_env_var: "{{ env_var('SPECIFIC_ENV_VAR') }}"

Then, within the model's Python code, use the dbt.config.get() function to access values of configurations that have been set:

def model(dbt, session):
    target_name = dbt.config.get("target_name")
    specific_var = dbt.config.get("specific_var")
    specific_env_var = dbt.config.get("specific_env_var")

    orders_df = dbt.ref("fct_orders")

    # limit data in dev
    if target_name == "dev":
        orders_df = orders_df.limit(500)

    return orders_df
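Because the model only talks to the dbt object it is handed, the branching on a config value can be reasoned about with simple stand-ins. The sketch below is not how dbt runs a model: FakeDbt and FakeConfig are hypothetical classes written for this illustration, a list of dicts stands in for a DataFrame, and list slicing stands in for limit().

```python
class FakeConfig:
    """Hypothetical stand-in for dbt.config, backed by a plain dict."""

    def __init__(self, values):
        self._values = values

    def get(self, key, default=None):
        return self._values.get(key, default)


class FakeDbt:
    """Hypothetical stand-in for the dbt object passed to model()."""

    def __init__(self, config_values, tables):
        self.config = FakeConfig(config_values)
        self._tables = tables

    def ref(self, name):
        return self._tables[name]


def model(dbt, session):
    target_name = dbt.config.get("target_name")
    orders = dbt.ref("fct_orders")
    # limit data in dev (slicing stands in for DataFrame.limit)
    if target_name == "dev":
        orders = orders[:500]
    return orders


orders = [{"order_id": i} for i in range(1200)]
dev_rows = model(FakeDbt({"target_name": "dev"}, {"fct_orders": orders}), session=None)
prod_rows = model(FakeDbt({"target_name": "prod"}, {"fct_orders": orders}), session=None)
print(len(dev_rows), len(prod_rows))  # 500 1200
```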

Accessing custom meta values

To store custom values, use the meta config. For example, if you have a model named my_python_model and you want to store custom values, you can do the following:

models:
  - name: my_python_model
    config:
      meta:
        custom_value: "111"
        another_value: "abc"

Then access them in your Python model using the dbt.config.meta_get() method:

def model(dbt, session):
    # Access custom values stored in meta directly
    custom_value = dbt.config.meta_get("custom_value")
    another_value = dbt.config.meta_get("another_value")

    # Use your custom values in your model logic
    orders_df = dbt.ref("fct_orders")

    ...

You can also retrieve meta values using dbt.config.get("meta"), which returns the entire meta dictionary. When using this approach, handle the case where meta might not be configured:

custom_value = dbt.config.get("meta", {}).get("custom_value")

Dynamic configurations

In addition to the existing methods of configuring Python models, you also have dynamic access to configuration values set with dbt.config() within Python models using f-strings. This increases the possibilities for custom logic and configuration management.

def model(dbt, session):
    dbt.config(materialized="table")

    # Dynamic configuration access within Python f-strings,
    # which allows for real-time retrieval and use of configuration values.
    # Assuming 'my_var' is set to 5, this will print: Dynamic config value: 5
    print(f"Dynamic config value: {dbt.config.get('my_var')}")

Materializations

Python models support these materializations:

- table (default)

- incremental

Incremental Python models support all the same incremental strategies as their SQL counterparts. The specific strategies supported depend on your adapter. As an example, incremental models are supported on BigQuery with Dataproc for the merge incremental strategy; the insert_overwrite strategy is not yet supported.

Python models can't be materialized as view or ephemeral. Python isn't supported for non-model resource types (like tests and snapshots).

For incremental models, like SQL models, you need to filter incoming tables to only new rows of data:

- Snowpark

- BigQuery DataFrames

- PySpark

Snowpark:

import snowflake.snowpark.functions as F

def model(dbt, session):
    dbt.config(materialized = "incremental")
    df = dbt.ref("upstream_table")

    if dbt.is_incremental:

        # only new rows compared to max in current table
        max_from_this = f"select max(updated_at) from {dbt.this}"
        df = df.filter(df.updated_at >= session.sql(max_from_this).collect()[0][0])

        # or only rows from the past 3 days
        df = df.filter(df.updated_at >= F.dateadd("day", F.lit(-3), F.current_timestamp()))

    ...

    return df

BigQuery DataFrames:

import datetime

import bigframes.pandas as bpd

def model(dbt, session):
    dbt.config(materialized = "incremental")
    bdf = dbt.ref("upstream_table")

    if dbt.is_incremental:

        # only new rows compared to max in current table
        max_from_this = f"select max(updated_at) from {dbt.this}"
        bdf = bdf[bdf['updated_at'] >= bpd.read_gbq(max_from_this).values[0][0]]

        # or only rows from the past 3 days
        bdf = bdf[bdf['updated_at'] >= datetime.date.today() - datetime.timedelta(days=3)]

    ...

    return bdf

PySpark:

import pyspark.sql.functions as F

def model(dbt, session):
    dbt.config(materialized = "incremental")
    df = dbt.ref("upstream_table")

    if dbt.is_incremental:

        # only new rows compared to max in current table
        max_from_this = f"select max(updated_at) from {dbt.this}"
        df = df.filter(df.updated_at >= session.sql(max_from_this).collect()[0][0])

        # or only rows from the past 3 days
        df = df.filter(df.updated_at >= F.date_add(F.current_timestamp(), F.lit(-3)))

    ...

    return df
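The "only new rows compared to max in current table" pattern used in the examples above can be sketched without any data platform. In this illustration, lists of dicts stand in for the upstream DataFrame and for the existing table ({dbt.this}); the function name incremental_filter and the sample rows are assumptions made for the example.

```python
from datetime import datetime


def incremental_filter(upstream_rows, existing_rows, is_incremental):
    """Keep only rows at or after the newest updated_at already in the target."""
    # On a first (non-incremental) run, every upstream row is processed.
    # Treating an empty target the same way is a choice made for this sketch.
    if not is_incremental or not existing_rows:
        return list(upstream_rows)
    max_seen = max(r["updated_at"] for r in existing_rows)
    # '>=' mirrors the comparison in the examples above, so rows that share
    # the current maximum timestamp are picked up again.
    return [r for r in upstream_rows if r["updated_at"] >= max_seen]


existing = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
]
upstream = existing + [{"id": 3, "updated_at": datetime(2024, 1, 7)}]

full = incremental_filter(upstream, existing, is_incremental=False)
new = incremental_filter(upstream, existing, is_incremental=True)
print([r["id"] for r in full])  # [1, 2, 3]
print([r["id"] for r in new])   # [2, 3]
```

Row 2 appears in the incremental result because its timestamp equals the current maximum. Whether reprocessing such rows is acceptable depends on the incremental strategy your adapter applies when writing the result.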

Python-specific functionality

Defining functions

In addition to defining a model function, the Python model can import other functions or define its own. Here's an example on Snowpark, defining a custom add_one function:

def add_one(x):
    return x + 1

def model(dbt, session):
    dbt.config(materialized="table")
    temps_df = dbt.ref("temperatures")

    # warm things up just a little
    df = temps_df.withColumn("degree_plus_one", add_one(temps_df["degree"]))
    return df

Currently, Python functions defined in one dbt model can't be imported and reused in other models. Refer to Code reuse for the potential patterns being considered.

Using PyPI packages

You can also define functions that depend on third-party packages so long as those packages are installed and available to the Python runtime on your data platform.

In this example, we use the holidays package to determine if a given date is a holiday in France. The code below uses the pandas API for simplicity and consistency across platforms. The exact syntax, and the need to refactor for multi-node processing, still vary.

- Snowpark

- BigQuery DataFrames

- PySpark

Snowpark:

import holidays

def is_holiday(date_col):
    # Chez Jaffle
    french_holidays = holidays.France()
    is_holiday = (date_col in french_holidays)
    return is_holiday

def model(dbt, session):
    dbt.config(
        materialized = "table",
        packages = ["holidays"]
    )

    orders_df = dbt.ref("stg_orders")

    df = orders_df.to_pandas()

    # apply our function
    # (columns need to be in uppercase on Snowpark)
    df["IS_HOLIDAY"] = df["ORDER_DATE"].apply(is_holiday)
    df["ORDER_DATE"].dt.tz_localize('UTC') # convert from Number/Long to tz-aware Datetime

    # return final dataset (Pandas DataFrame)
    return df

BigQuery DataFrames:

import holidays

import bigframes.pandas as bpd

def model(dbt, session):
    dbt.config(submission_method="bigframes")

    data = {
        'id': [0, 1, 2],
        'name': ['Brian Davis', 'Isaac Smith', 'Marie White'],
        'birthday': ['2024-03-14', '2024-01-01', '2024-11-07']
    }
    bdf = bpd.DataFrame(data)
    bdf['birthday'] = bpd.to_datetime(bdf['birthday'])
    bdf['birthday'] = bdf['birthday'].dt.date

    us_holidays = holidays.US(years=2024)

    return bdf[bdf['birthday'].isin(us_holidays)]

PySpark:

import holidays

def is_holiday(date_col):
    # Chez Jaffle
    french_holidays = holidays.France()
    is_holiday = (date_col in french_holidays)
    return is_holiday

def model(dbt, session):
    dbt.config(
        materialized = "table",
        packages = ["holidays"]
    )

    orders_df = dbt.ref("stg_orders")

    df = orders_df.to_pandas_on_spark()  # Spark 3.2+
    # df = orders_df.toPandas() in earlier versions

    # apply our function
    df["is_holiday"] = df["order_date"].apply(is_holiday)

    # convert back to PySpark
    df = df.to_spark()               # Spark 3.2+
    # df = session.createDataFrame(df) in earlier versions

    # return final dataset (PySpark DataFrame)
    return df

Configuring packages

We encourage you to configure required packages and versions so dbt can track them in project metadata. This configuration is required for the implementation on some platforms. If you need specific versions of packages, specify them.

def model(dbt, session):
    dbt.config(
        packages = ["numpy==1.23.1", "scikit-learn"]
    )

models:
  - name: my_python_model
    config:
      packages:
        - "numpy==1.23.1"
        - scikit-learn

User-defined functions (UDFs)

You can use the @udf decorator or udf function to define an "anonymous" function and call it within your model function's DataFrame transformation. This is a typical pattern for applying more complex functions as DataFrame operations, especially if those functions require inputs from third-party packages.

You can also define SQL or Python UDFs as first-class resources under /functions with a matching YAML file. dbt builds them as part of the DAG, and you reference them from SQL using {{ function('my_udf') }}. These UDFs are reusable across tools (BI, notebooks, SQL clients) because they live in your warehouse.

- Snowpark

- BigQuery DataFrames

- PySpark

Snowpark:

import snowflake.snowpark.types as T
import snowflake.snowpark.functions as F
import numpy

def register_udf_add_random():
    add_random = F.udf(
        # use 'lambda' syntax, for simple functional behavior
        lambda x: x + numpy.random.normal(),
        return_type=T.FloatType(),
        input_types=[T.FloatType()]
    )
    return add_random

def model(dbt, session):

    dbt.config(
        materialized = "table",
        packages = ["numpy"]
    )

    temps_df = dbt.ref("temperatures")

    add_random = register_udf_add_random()

    # warm things up, who knows by how much
    df = temps_df.withColumn("degree_plus_random", add_random("degree"))
    return df

Note: Due to a Snowpark limitation, it is not currently possible to register complex named UDFs within stored procedures and, therefore, dbt Python models. We are looking to add native support for Python UDFs as a project/DAG resource type in a future release. For the time being, if you want to create a "vectorized" Python UDF via the Batch API, we recommend either:

- Writing create function inside a SQL macro, to run as a hook or run-operation

- Registering from a staged file within your Python model code

BigQuery DataFrames:

import bigframes.pandas as bpd

def model(dbt, session):
    dbt.config(submission_method="bigframes")

    # You can also use @bpd.udf
    @bpd.remote_function(dataset='jialuo_test_us')
    def my_func(x: int) -> int:
        return x * 1100

    data = {"int": [1, 2], "str": ['a', 'b']}
    bdf = bpd.DataFrame(data=data)
    bdf['int'] = bdf['int'].apply(my_func)

    return bdf

PySpark:

import pyspark.sql.types as T
import pyspark.sql.functions as F
import numpy

# use a 'decorator' for more readable code
@F.udf(returnType=T.DoubleType())
def add_random(x):
    random_number = numpy.random.normal()
    return x + random_number

def model(dbt, session):
    dbt.config(
        materialized = "table",
        packages = ["numpy"]
    )

    temps_df = dbt.ref("temperatures")

    # warm things up, who knows by how much
    df = temps_df.withColumn("degree_plus_random", add_random("degree"))
    return df
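The decorator form and the function-call form used in the examples above are two spellings of the same idea: wrap a scalar function so it can be applied across a column. The sketch below shows that equivalence in plain Python. The udf helper here is hypothetical and written only for this illustration; it is not the Snowpark, BigQuery DataFrames, or PySpark API, and a list stands in for a column.

```python
import functools


def udf(fn):
    """Hypothetical helper: lift a scalar function so it maps over a column (a list)."""

    @functools.wraps(fn)
    def apply_to_column(column):
        return [fn(value) for value in column]

    return apply_to_column


# Decorator form: reads well for named, multi-line functions.
@udf
def add_one(x):
    return x + 1


# Function-call form: convenient for short lambdas.
double = udf(lambda x: x * 2)

degrees = [10.0, 12.5]
print(add_one(degrees))  # [11.0, 13.5]
print(double(degrees))   # [20.0, 25.0]
```

On a real platform the wrapped function is serialized and executed by the warehouse or cluster, which is why any third-party packages it uses must be available in that runtime.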

Code reuse

To re-use a Python function across multiple dbt models, you can define Python UDFs under /functions with a matching YAML file. These UDFs live in your warehouse and can be reused across tools (BI, notebooks, SQL clients).

In the future, we're considering also adding support for Private Python packages. In addition to importing reusable functions from public PyPI packages, many data platforms support uploading custom Python assets and registering them as packages. The upload process looks different across platforms, but your code’s actual import looks the same.

- How can dbt help users when uploading or initializing private Python assets? Is this a new form of dbt deps?

- How can dbt support users who want to test custom functions? If defined as UDFs: "unit testing" in the database? If "pure" functions in packages: encourage adoption of pytest?

💬 Discussion: "Python models: package, artifact/object storage, and UDF management in dbt"

DataFrame API and syntax

Over the past decade, most people writing data transformations in Python have adopted DataFrame as their common abstraction. dbt follows this convention by returning ref() and source() as DataFrames, and it expects all Python models to return a DataFrame.

A DataFrame is a two-dimensional data structure (rows and columns). It supports convenient methods for transforming that data and creating new columns from calculations performed on existing columns. It also offers convenient ways for previewing data while developing locally or in a notebook.

That's about where the agreement ends. There are numerous frameworks with their own syntaxes and APIs for DataFrames. The pandas library offered one of the original DataFrame APIs, and its syntax is the most common to learn for new data professionals. Most newer DataFrame APIs are compatible with pandas-style syntax, though few can offer perfect interoperability. This is true for BigQuery DataFrames, Snowpark, and PySpark, which have their own DataFrame APIs.

When developing a Python model, you will find yourself asking these questions:

Why pandas? — It's the most common API for DataFrames. It makes it easy to explore sampled data and develop transformations locally. You can “promote” your code as-is into dbt models and run it in production for small datasets.

Why not pandas? — Performance. pandas runs "single-node" transformations, which cannot benefit from the parallelism and distributed computing offered by modern data warehouses. This quickly becomes a problem as you operate on larger datasets. Some data platforms support optimizations for code written using pandas DataFrame API, preventing the need for major refactors. For example, pandas on PySpark offers support for 95% of pandas functionality, using the same API while still leveraging parallel processing.

- When developing a new dbt Python model, should we recommend pandas-style syntax for rapid iteration and then refactor?

- Which open source libraries provide compelling abstractions across different data engines and vendor-specific APIs?

- Should dbt attempt to play a longer-term role in standardizing across them?

💬 Discussion: "Python models: the pandas problem (and a possible solution)"

Limitations

Python models have capabilities that SQL models do not. They also have some drawbacks compared to SQL models:

- Time and cost. Python models are slower to run than SQL models, and the cloud resources that run them can be more expensive. Running Python requires more general-purpose compute. That compute might sometimes live on a separate service or architecture from your SQL models. However: We believe that deploying Python models via dbt (with unified lineage, testing, and documentation) is, from a human standpoint, dramatically faster and cheaper. By comparison, spinning up separate infrastructure to orchestrate Python transformations in production and different tooling to integrate with dbt is much more time-consuming and expensive.

- Syntax differences are even more pronounced. Over the years, dbt has done a lot, via dispatch patterns and packages such as dbt_utils, to abstract over differences in SQL dialects across popular data warehouses. Python offers a much wider field of play. If there are five ways to do something in SQL, there are 500 ways to write it in Python, all with varying performance and adherence to standards. Those options can be overwhelming. As the maintainers of dbt, we will be learning from state-of-the-art projects tackling this problem and sharing guidance as we develop it.

- These capabilities are very new. As data warehouses develop new features, we expect them to offer cheaper, faster, and more intuitive mechanisms for deploying Python transformations. We reserve the right to change the underlying implementation for executing Python models in future releases. Our commitment to you is around the code in your model .py files, following the documented capabilities and guidance we're providing here.

- Lack of print() support. The data platform runs and compiles your Python model without dbt's oversight. This means it doesn't display the output of commands such as Python's built-in print() function in dbt's logs.

As a general rule, if there's a transformation you could write equally well in SQL or Python, we believe that well-written SQL is preferable: it's more accessible to a greater number of colleagues, and it's easier to write code that's performant at scale. If there's a transformation you can't write in SQL, or where ten lines of elegant and well-annotated Python could save you 1000 lines of hard-to-read Jinja-SQL, Python is the way to go.
