User-defined functions

User-defined functions (UDFs) enable you to define and register custom functions in your warehouse. Like macros, UDFs promote code reuse, but they are objects in the warehouse so you can reuse the same logic in tools outside dbt, such as BI tools, data science notebooks, and more.

UDFs are particularly valuable for sharing logic across multiple tools, standardizing complex business calculations, improving performance for compute-intensive operations (since they're compiled and optimized by your warehouse's query engine), and version controlling custom logic within your dbt project.

dbt creates, updates, and renames UDFs as part of DAG execution. The UDF is built in the warehouse before the model that references it. Refer to listing and building UDFs for more info on how to build UDFs in your project.

Refer to Function properties or Function configurations for more information on the configs/properties for UDFs.

Prerequisites

-

Make sure you're using dbt platform's Latest Fusion or Latest release track or dbt Core v1.11.

-

Use one of the following adapters:

- dbt Core

- dbt Fusion engine

- BigQuery

- Snowflake

- Redshift

- Postgres

- Databricks

- BigQuery

- Snowflake

- Redshift

- Databricks

Additional languages (for example, Java, JavaScript, Scala) aren't currently supported when developing UDFs.

See the Limitations section below for the full list of currently supported UDF capabilities.

Defining UDFs in dbt

You can define SQL and Python UDFs in dbt. Python UDFs are supported in Snowflake and BigQuery when using dbt Core or Fusion.

Follow these steps to define UDFs in dbt:

-

Create a SQL or Python file under the

functionsdirectory. For example, this UDF checks if a string represents a positive integer:- SQL

- Python

Define a SQL UDF in a SQL file.

functions/is_positive_int.sql# syntax for BigQuery, Snowflake, and Databricks

REGEXP_INSTR(a_string, '^[0-9]+$')

# syntax for Redshift and Postgres

SELECT REGEXP_INSTR(a_string, '^[0-9]+$')Define a Python UDF in a Python file.

functions/is_positive_int.pyimport re

def main(a_string):

return 1 if re.search(r'^[0-9]+$', a_string or '') else 0Note: You can specify configs in a config block in the SQL file or in the corresponding properties YAML file in next step (Step 2).

-

Specify the function name and define the config, properties, return type, and optional arguments in a corresponding properties YAML file. For example:

- SQL

- Python

functions/schema.ymlfunctions:

- name: is_positive_int # required

description: My UDF that returns 1 if a string represents a naked positive integer (like "10", "+8" is not allowed). # optional

config:

schema: udf_schema

database: udf_db

volatility: deterministic

arguments: # optional

- name: a_string # required if arguments is specified

data_type: string # required if arguments is specified

description: The string that I want to check if it's representing a positive integer (like "10")

default_value: "'1'" # optional, available in Snowflake and Postgres

returns: # required

data_type: integer # requiredThe following configs are required when defining a Python UDF:

-

runtime_version— Specify the Python version to run. Supported values are: -

entry_point— Specify the Python function to be called.

For example:

functions/schema.ymlfunctions:

- name: is_positive_int # required

description: My UDF that returns 1 if a string represents a naked positive integer (like "10", "+8" is not allowed). # optional

config:

runtime_version: "3.11" # required

entry_point: main # required

schema: udf_schema

database: udf_db

volatility: deterministic

arguments: # optional

- name: a_string # required if arguments is specified

data_type: string # required if arguments is specified

description: The string that I want to check if it's representing a positive integer (like "10")

default_value: "'1'" # optional, available in Snowflake and Postgres

returns: # required

data_type: integer # requiredvolatility warehouse-specificSomething to note is that

volatilityis accepted in dbt for both SQL and Python UDFs, but the handling of it is warehouse-specific. BigQuery ignoresvolatilityand dbt displays a warning. In Snowflake,volatilityis applied when creating the UDF. Refer to volatility for more information. -

Run one of the following

dbt buildcommands to build your UDFs and create them in the warehouse:Build all UDFs:

dbt build --select "resource_type:function"Or build a specific UDF:

dbt build --select is_positive_intWhen you run

dbt build, both thefunctions/schema.ymlfile and the corresponding SQL or Python file (for example,functions/is_positive_int.sqlorfunctions/is_positive_int.py) work together to generate theCREATE FUNCTIONstatement.The rendered

CREATE FUNCTIONstatement depends on which adapter you're using. For example:- SQL

- Python

- Snowflake

- Redshift

- BigQuery

- Databricks

- Postgres

CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string STRING DEFAULT '1')

RETURNS INTEGER

LANGUAGE SQL

IMMUTABLE

AS $$

REGEXP_INSTR(a_string, '^[0-9]+$')

$$;CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string VARCHAR)

RETURNS INTEGER

IMMUTABLE

AS $$

SELECT REGEXP_INSTR(a_string, '^[0-9]+$')

$$ LANGUAGE SQL;CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string STRING)

RETURNS INT64

AS (

REGEXP_INSTR(a_string, r'^[0-9]+$')

);CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string STRING)

RETURNS INT

DETERMINISTIC

RETURN REGEXP_INSTR(a_string, '^[0-9]+$');CREATE OR REPLACE FUNCTION udf_schema.is_positive_int(a_string text DEFAULT '1')

RETURNS int

LANGUAGE sql

IMMUTABLE

AS $$

SELECT regexp_instr(a_string, '^[0-9]+$')

$$;- Snowflake

- BigQuery

CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string STRING DEFAULT '1')

RETURNS INTEGER

LANGUAGE PYTHON

RUNTIME_VERSION = '3.11'

HANDLER = 'main'

AS $$

import re

def main(a_string):

return 1 if re.search(r'^[0-9]+$', a_string or '') else 0

$$;CREATE OR REPLACE FUNCTION udf_db.udf_schema.is_positive_int(a_string STRING)

RETURNS INT64

LANGUAGE python

OPTIONS(runtime_version="python-3.11", entry_point="main")

AS r'''

import re

def main(a_string):

return 1 if re.search(r'^[0-9]+$', a_string or '') else 0

'''; -

Reference the UDF in a model using the

{{ function(...) }}macro. For example:models/my_model.sqlselect

maybe_positive_int_column,

{{ function('is_positive_int') }}(maybe_positive_int_column) as is_positive_int

from {{ ref('a_model_i_like') }} -

Run

dbt compileto see how the UDF is referenced. In the following example, the{{ function('is_positive_int') }}is replaced by the UDF nameudf_db.udf_schema.is_positive_int.models/my_model.sqlselect

maybe_positive_int_column,

udf_db.udf_schema.is_positive_int(maybe_positive_int_column) as is_positive

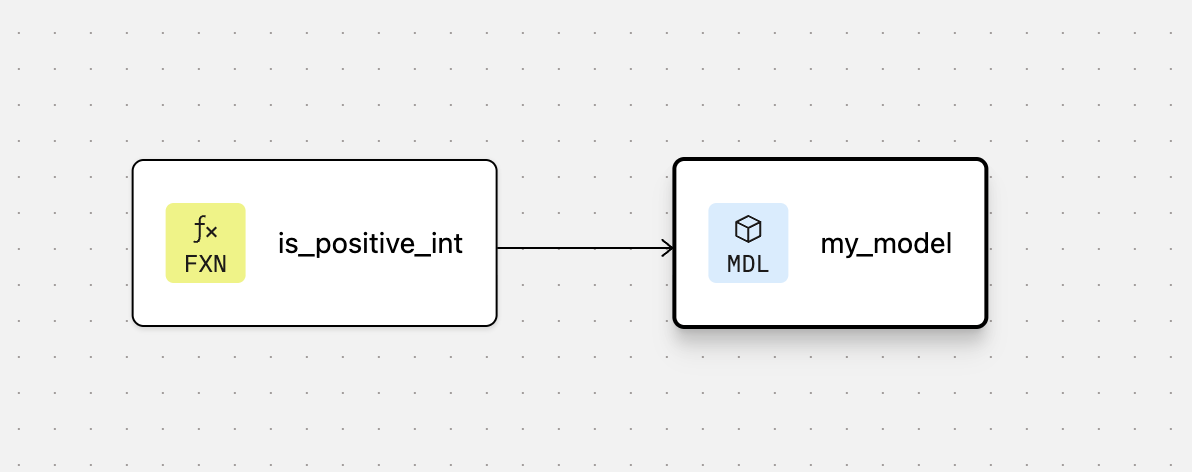

from analytics.dbt_schema.a_model_i_likeIn your DAG, a UDF node is created from the SQL/Python and YAML definitions, and there will be a dependency between

is_positive_int→my_model.

After defining a UDF, if you update the SQL/Python file that contains its function body (is_positive_int.sql or is_positive_int.py in this example) or its configurations, your changes will be applied to the UDF in the warehouse next time you build.

Using UDFs in unit tests

You can use unit tests to validate models that reference UDFs. Before running unit tests, make sure the function exists in your warehouse. To ensure that the function exists for a unit test, run:

dbt build --select "+my_model_to_test" --empty

Following the example in Defining UDFs in dbt, here's an example of a unit test that validates a model that calls a UDF:

unit_tests:

- name: test_is_positive_int

description: "Check my is_positive_int logic captures edge cases"

model: my_model

given:

- input: ref('a_model_i_like')

rows:

- { maybe_positive_int_column: 10 }

- { maybe_positive_int_column: -4 }

- { maybe_positive_int_column: +8 }

- { maybe_positive_int_column: 1.0 }

expect:

rows:

- { maybe_positive_int_column: 10, is_positive: true }

- { maybe_positive_int_column: -4, is_positive: false }

- { maybe_positive_int_column: +8, is_positive: true }

- { maybe_positive_int_column: 1.0, is_positive: true }

Listing and building UDFs

Use the list command to list UDFs in your project: dbt list --select "resource_type:function" or dbt list --resource-type function.

Use the build command to select UDFs when building a project: dbt build --select "resource_type:function".

For more information about selecting UDFs, see the examples in Node selector methods.

Limitations

- Creating UDFs in other languages (for example, Java, JavaScript, or Scala) is not yet supported.

- Python UDFs are supported in Snowflake and BigQuery only (when using dbt Core or Fusion). Other warehouses aren't yet supported for Python UDFs.

- Only scalar and aggregate functions are currently supported. For more information, see Supported function types.

Related FAQs

Was this page helpful?

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.